Ross Anderson Discusses AI Model Collapse as a Growing Problem in Online Content

In Brief

Ross Anderson warns of the potential risks associated with the intellectual decline of future generations of large language models (LLMs), which have become the primary tool for editing and creating new texts.

Model collapse can be prevented by understanding and mitigating the risks associated with generative AI.

In just six months, generative AI has made significant strides, capturing the attention of the world. ChatGPT, a prominent example of this technology, quickly gained popularity and widespread use. However, renowned expert Ross Anderson warns about the potential risks associated with the intellectual decline of future generations of models.

Ross Anderson is a pioneer in security engineering and a leading authority on finding vulnerabilities in security systems and algorithms. As a Fellow of the Royal Academy of Engineering and a Professor at the University of Cambridge, he has contributed extensively to the field of information security, shaping threat models across various sectors.

Now Anderson is raising the alarm about a global threat to humanity: the collapse of large language models (LLMs). Until recently, most of the text on the internet was written by humans. LLMs have now become a primary tool for editing and creating new text, and they are increasingly displacing human-written content.

This shift raises important questions: where will this trend lead, and what will happen when LLMs dominate the internet? The implications go beyond text. For instance, a music model trained on Mozart's compositions may produce output that resembles Mozart but lacks his brilliance: a musical "Salieri." If that output is then used to train the next model, and so on, each generation risks a further decline in quality and intelligence.

This may remind you of the movie "Multiplicity," starring Michael Keaton, in which each clone made from a previous clone is less intelligent than the one before it.

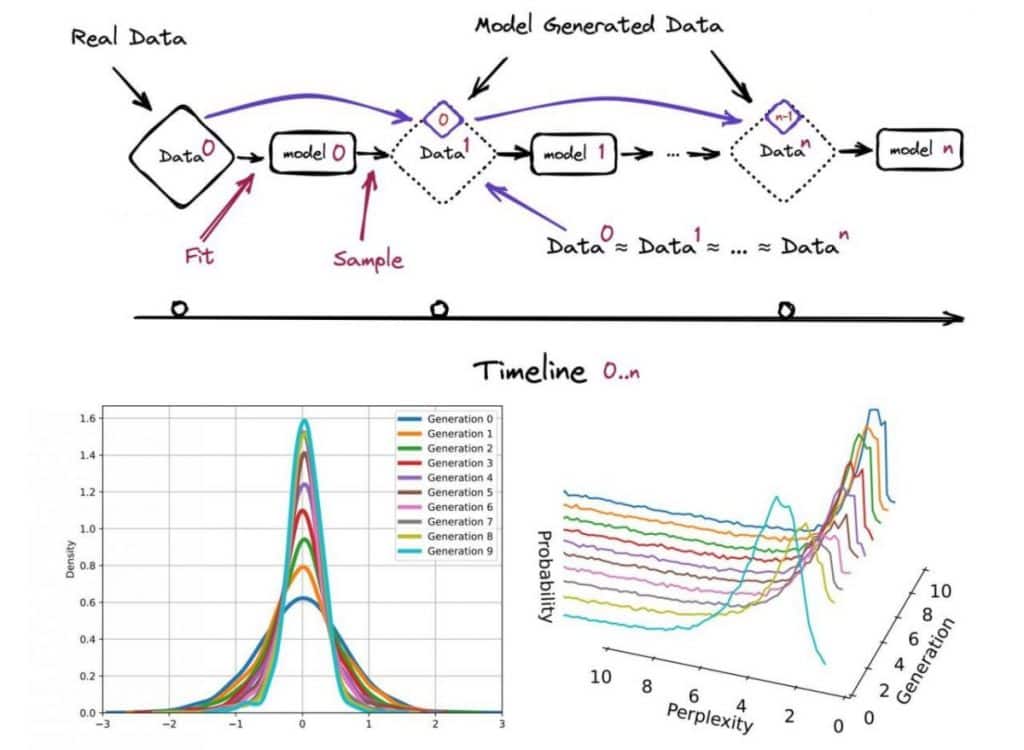

The same phenomenon can occur with LLMs. Training a model on content generated by earlier models can cause irreversible defects and degrade text quality over generations. The original distribution of content becomes distorted, with rare content disappearing first, and nonsensical information floods in. In the simplest mathematical case, a Gaussian distribution that is repeatedly re-estimated from its own samples narrows until it loses almost all of its variance; in extreme cases, generated text may become meaningless. Later generations may even misperceive reality because of mistakes inherited from their predecessors. This phenomenon is known as "model collapse."
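The Gaussian case mentioned above can be reproduced in a few lines. The following is a minimal Python sketch of a toy setting assumed purely for illustration (it is not Anderson's code): a one-dimensional Gaussian stands in for a "model," and each generation is refitted only to a finite sample drawn from the previous generation's fit. The function name `simulate_collapse` and its parameters are hypothetical.

```python
import random
import statistics


def simulate_collapse(generations: int, sample_size: int, seed: int = 0) -> list[float]:
    """Toy model collapse (illustrative assumption, not a real LLM pipeline).

    Generation 0 is the original "human" distribution N(0, 1). Every later
    generation is a Gaussian fitted only to samples produced by the previous
    generation. Returns the fitted standard deviation after each generation.
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    mu, sigma = 0.0, 1.0       # generation 0: the original data distribution
    history = [sigma]
    for _ in range(generations):
        # The next "model" sees only data generated by the current one.
        sample = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # Maximum-likelihood refit: sample mean and population standard deviation.
        mu = statistics.fmean(sample)
        sigma = statistics.pstdev(sample)
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = simulate_collapse(generations=500, sample_size=10)
    for g in (0, 10, 50, 100, 500):
        print(f"generation {g:>3}: fitted std = {stds[g]:.3e}")
```

Because each refit sees only a finite sample, estimation errors compound instead of cancelling out: with small samples, the fitted standard deviation typically drifts toward zero over hundreds of generations, so later "models" produce almost identical values. The exact numbers depend on the seed and sample size.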

The consequences of model collapse are significant:

✔️ The internet becomes increasingly filled with nonsensical content.

✔️ Individuals consuming this content may unwittingly become less informed and lose intellectual capabilities.

Fortunately, there is hope: Anderson suggests that model collapse can be prevented, which offers some optimism amid these concerns. Readers interested in possible solutions, and in how to keep the quality of online information from declining, may want to explore the topic further.

While generative AI holds promise, it is essential to remain vigilant and address the challenges it presents. By understanding and mitigating the risks associated with model collapse, we can work towards harnessing the benefits of this technology while preserving the integrity of information on the internet.


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to succeed in the ever-changing landscape of the internet.
