GPT-4 Can Handle Your Requests for Images, Documents, Diagrams, and Screenshots

In Brief

GPT-4 can accept images, documents, diagrams, and screenshots as inputs. It’s an improvement over GPT-3, which could only handle text.

GPT-4 outperforms earlier models on various exams and tests, and it can draw on information in images that may not be available in written form.

OpenAI’s latest milestone, the new GPT-4 model, can accept prompts that include images, documents with text, diagrams, or screenshots. This is a significant improvement over its predecessor, GPT-3, which could only understand and output text. With this new feature, GPT-4 generates text outputs from inputs that mix text and images.

“Over a range of domains—including documents with text and photographs, diagrams, or screenshots—GPT-4 exhibits similar capabilities as it does on text-only inputs,” OpenAI wrote.

GPT-4 is larger than its predecessors, which indicates that it was trained on more data and contains more weights in its model file, making it more expensive to run. The new language model generates human-like text using deep learning and pre-training on a large dataset.

GPT-4 has outperformed other language models on a variety of exams and tests, in part because it can draw on information in images that may not be available in written form.

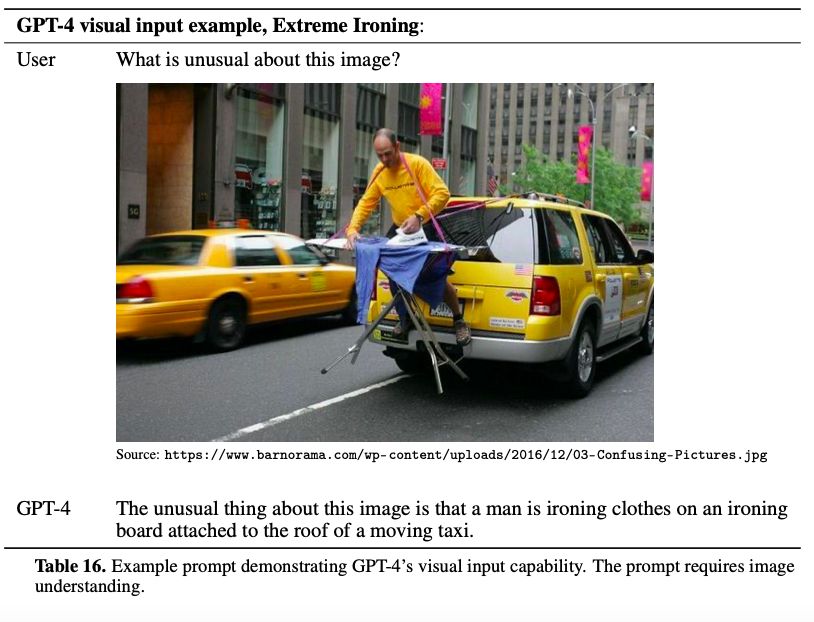

The new GPT-4 model can identify exactly what an illustration depicts, analyze it, and even explain its meaning. In the demo, GPT-4 explained the visual joke of a VGA cable plugged into an iPhone. It could also explain what is unusual about a picture of “extreme ironing,” which you can check out below.

GPT-4’s new capabilities also have more practical uses. In the presentation, GPT-4 was shown suggesting what could be cooked from the ingredients in a picture. If you have food at home but no idea what to do with it, take a snapshot of the ingredients and GPT-4 can suggest dishes you can prepare.

This ability to understand and interpret visual information makes GPT-4 a powerful tool for tasks such as image captioning, visual question answering, and even content creation. By combining text and visual understanding, GPT-4 has the potential to transform industries such as advertising, design, and e-commerce, and to take boring, mundane tasks off people’s hands.

The advanced language model also ‘understands’ screenshots and documents containing text, tables, diagrams, or other visual elements. For instance, if you upload a three-page research paper and need it summarized and explained, GPT-4 can do so.

Bloomberg anchor Jon Erlichman shared a demonstration of GPT-4 turning a hand-sketched design into a functional website.

The technology could also serve as a mobility aid by describing the surroundings to visually impaired people. To this end, OpenAI has partnered with Be My Eyes, an app designed to help blind people when they need to look at something, for instance while grocery shopping. The app lets “sighted volunteers and professionals lend their eyes to solve tasks big and small to assist blind and low-vision people lead more independent lives.” It now also offers a virtual volunteer tool powered by OpenAI’s GPT-4.

OpenAI’s GPT-4 currently accepts text and images as inputs, but it cannot yet handle audio or video. There are indications, however, that these modalities may be added in the next iteration of the technology.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Agne is a journalist who covers the latest trends and developments in the metaverse, AI, and Web3 industries for the Metaverse Post. Her passion for storytelling has led her to conduct numerous interviews with experts in these fields, always seeking to uncover exciting and engaging stories. Agne holds a Bachelor’s degree in literature and has an extensive background in writing about a wide range of topics including travel, art, and culture. She has also volunteered as an editor for an animal rights organization, where she helped raise awareness about animal welfare issues. Contact her at agnec@mpost.io.
