OpenAI: New Process-Supervised Reward Modeling Improves AI Reasoning

In Brief

OpenAI’s process-supervised reward modeling (PRM) evaluates each intermediate step of an AI model's reasoning, not just its final answer, and this improved the model's results on multi-step math problems.

OpenAI has once again caught the AI community's attention, this time with its work on process-supervised reward models (PRMs). Instead of judging only the answer a model produces, a PRM evaluates each intermediate step of the model's reasoning, and OpenAI reports that this leads to better performance.

In traditional reinforcement learning from human feedback (RLHF), feedback is typically based only on the final result the model produces, an approach known as outcome supervision. OpenAI's new research instead evaluates the individual steps of the model's reasoning. This makes the feedback more fine-grained: a mistake can be pinned to the exact step where it occurs.
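
To make the difference concrete, here is a toy sketch in Python. It assumes a solution is a list of steps, each carrying a hypothetical human judgment; the `Step` class and both feedback functions are illustrative names, not OpenAI's code. A solution can reach the correct final answer through flawed steps: outcome-level feedback rewards it anyway, while step-level feedback catches the errors.

```python
from dataclasses import dataclass


@dataclass
class Step:
    text: str
    is_correct: bool  # hypothetical human judgment of this single step


def outcome_feedback(final_answer: str, reference: str) -> int:
    """Outcome-style feedback: one signal for the whole solution, based only on the result."""
    return 1 if final_answer.strip() == reference.strip() else 0


def process_feedback(steps: list[Step]) -> list[int]:
    """Process-style feedback: one signal per intermediate step."""
    return [1 if step.is_correct else 0 for step in steps]


# Toy problem: (2 + 3) * 2. Two compensating mistakes still reach the right answer.
steps = [
    Step("2 + 3 = 6", is_correct=False),   # should be 5
    Step("6 * 2 = 10", is_correct=False),  # should be 12
]
final_answer, reference = "10", "10"

print(outcome_feedback(final_answer, reference))  # 1: the result alone looks fine
print(process_feedback(steps))                    # [0, 0]: both steps are flagged
```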

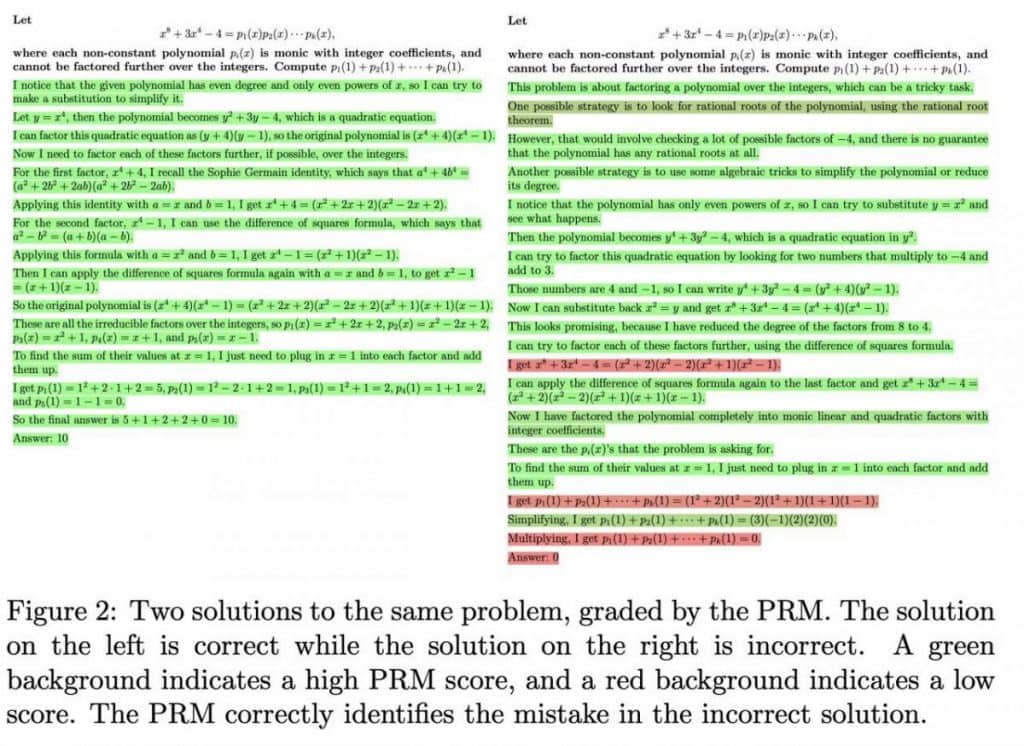

To study this, OpenAI chose mathematical problems that require multi-step solutions. A separate model was trained to evaluate the intermediate steps, acting as a critic that identifies reasoning errors made by the primary model. According to OpenAI, this improves both the model's overall performance and its scores on the metrics used to assess its capabilities.
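
One way a step-level critic can improve final results is by scoring several candidate solutions and keeping the best one. The sketch below assumes the critic outputs a probability that each step is correct, and it scores a whole solution as the product of those probabilities, that is, the chance that every step is correct. The candidate names and numbers are made up, and this is an illustration under those assumptions rather than OpenAI's exact implementation.

```python
import math


def solution_score(step_probs: list[float]) -> float:
    """Probability that every step is correct, treating per-step estimates as independent."""
    return math.prod(step_probs)


def pick_best(candidates: dict[str, list[float]]) -> str:
    """Return the name of the candidate with the highest overall score."""
    return max(candidates, key=lambda name: solution_score(candidates[name]))


# Hypothetical per-step probabilities from the critic for two candidate solutions.
candidates = {
    "solution_a": [0.95, 0.90, 0.20],  # critic strongly doubts the last step
    "solution_b": [0.90, 0.85, 0.80],
}

print(round(solution_score(candidates["solution_a"]), 3))  # 0.171
print(pick_best(candidates))                                # solution_b
```

Taking the minimum step score instead of the product is another reasonable choice; both heavily penalize a solution for a single doubtful step.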

OpenAI has also released a meticulously curated dataset, PRM800K, containing 800,000 step-level labels. Each label rates a single step in the solution of a mathematical problem and was assigned manually. The effort shows how much OpenAI is willing to invest in high-quality datasets, and it raises the question of how much data the company has collected for other domains, such as programming or open-ended questions.
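
To picture what a step-level judgment looks like as data, here is a minimal sketch of one label per record. The field names are hypothetical and do not reproduce the released dataset's schema; the three-way rating (positive, neutral, negative, encoded as +1, 0, -1) is also an assumption made for illustration. Under this layout, each of the 800,000 judgments would correspond to one such record.

```python
from collections import Counter
from dataclasses import dataclass


# Hypothetical record layout, not the schema of the released dataset.
@dataclass(frozen=True)
class StepLabel:
    problem_id: str
    solution_id: str
    step_index: int
    rating: int  # assumed encoding: +1 positive, 0 neutral, -1 negative


def rating_counts(labels: list[StepLabel]) -> Counter:
    """How many steps received each rating."""
    return Counter(label.rating for label in labels)


def solutions_with_errors(labels: list[StepLabel]) -> set[tuple[str, str]]:
    """(problem, solution) pairs containing at least one step rated negative."""
    return {(label.problem_id, label.solution_id) for label in labels if label.rating == -1}


labels = [
    StepLabel("p1", "s1", 0, 1),
    StepLabel("p1", "s1", 1, -1),
    StepLabel("p1", "s2", 0, 1),
    StepLabel("p1", "s2", 1, 0),
    StepLabel("p2", "s1", 0, 1),
]

print(rating_counts(labels))
print(solutions_with_errors(labels))  # {('p1', 's1')}
```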

The experiments build on GPT-4, OpenAI's latest model in the GPT series. They do not include the RLHF stage; instead, a pure language model is used. Notably, OpenAI also ran experiments at a smaller scale, with models similar in design to GPT-4 but trained with roughly 200 times less compute.

One example shared by OpenAI shows how the model evaluates each individual step. In a screenshot included in the post, erroneous steps are flagged in red and given the lowest correctness score. The example offers a glimpse into how the model judges a line of reasoning. OpenAI has also published the instructions given to its human labelers, which others can use to organize similar crowdsourced annotation and build on this work.
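
Once per-step scores exist, reproducing the screenshot's behavior of flagging wrong steps and singling out the lowest score takes only a few lines. The sketch below assumes scores between 0 and 1 and a hypothetical cutoff of 0.5; neither the threshold nor the function names come from OpenAI's post.

```python
from typing import Optional


def flag_steps(step_scores: list[float], threshold: float = 0.5) -> list[int]:
    """Indices of steps whose correctness score falls below the (assumed) threshold."""
    return [i for i, score in enumerate(step_scores) if score < threshold]


def first_error(step_scores: list[float], threshold: float = 0.5) -> Optional[int]:
    """Earliest flagged step, or None if every step clears the threshold."""
    flagged = flag_steps(step_scores, threshold)
    return flagged[0] if flagged else None


def weakest_step(step_scores: list[float]) -> int:
    """Index of the lowest-scoring step."""
    return min(range(len(step_scores)), key=lambda i: step_scores[i])


scores = [0.97, 0.91, 0.08, 0.12]  # made-up per-step scores
print(flag_steps(scores))    # [2, 3]
print(first_error(scores))   # 2
print(weakest_step(scores))  # 2
```

Reporting the first flagged step is often the most useful signal, since later steps may simply inherit an earlier mistake.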

As OpenAI continues to push the boundaries of AI research, its focus on model reasoning and process-supervised reward modeling opens new possibilities for more capable AI. This latest work shows the company's commitment to improving model performance and opens the door to further advances in the field.

- Apple has reportedly restricted employees' use of ChatGPT and other AI-powered chatbots over privacy concerns. According to The Wall Street Journal, workers are also barred from using GitHub Copilot, an AI tool that writes software code automatically. ChatGPT, the AI-powered chatbot developed by OpenAI, has been criticized over privacy violations.

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
