Meta Introduces Segment Anything, Its New AI Model for Image Segmentation

In Brief

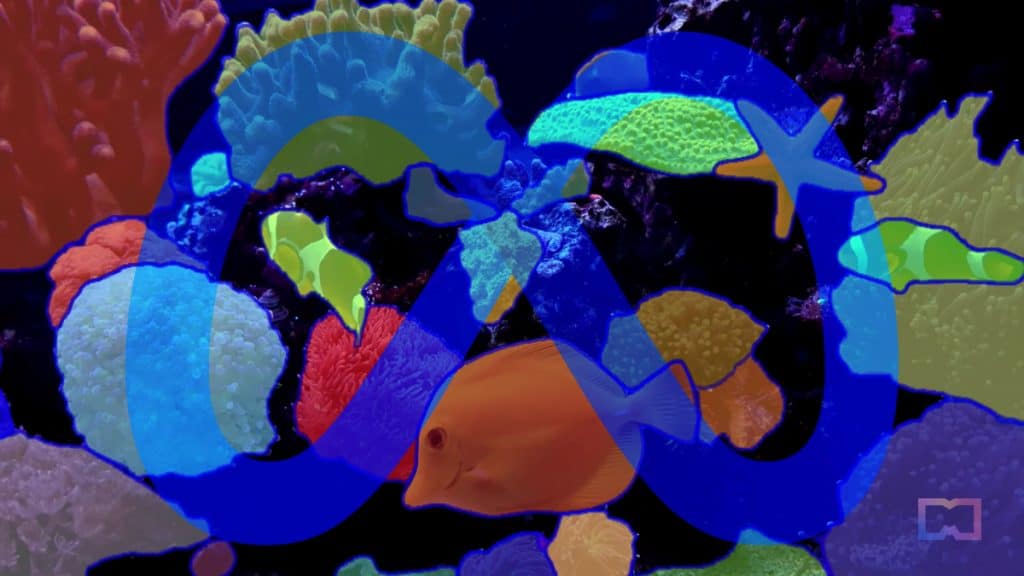

Meta has introduced Segment Anything, its new foundation model for image segmentation.

The company is also releasing SA-1B, the large dataset used to train the model, for research purposes.

Meta says that Segment Anything could become a component in larger AI systems for understanding both the visual and text content of a webpage.

Meta has introduced Segment Anything, its new foundation model for image segmentation. Segmentation, the process of identifying which pixels in an image belong to an object, is a core task in computer vision. It is used in a wide range of applications, from analyzing scientific imagery to editing photos.

In its introductory blog post, Meta noted that building an accurate segmentation model for a specific task has typically required specialized work by technical experts with access to AI training infrastructure and large volumes of carefully annotated in-domain data.

The Segment Anything project may change that. Its new dataset and model are intended to make accurate segmentation accessible to a wider audience by reducing the need for specialized technical expertise and infrastructure. To achieve this, the researchers built a promptable model that is trained on diverse data and can adapt to specific tasks, much as prompts are used to steer natural language processing models and chatbots.
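The following minimal sketch illustrates what a prompt and a segmentation mask are. It is not how Segment Anything works. The function `segment_from_point` is a hypothetical illustration that applies classic flood fill (region growing) to a tiny grid of grayscale values, whereas Meta's model uses a trained neural network. It does share the basic interface: a prompt goes in (here, a single clicked pixel), and a mask marking which pixels belong to the selected object comes out.

```python
from collections import deque


def segment_from_point(image, point, tolerance=0):
    """Return a boolean mask of pixels connected to `point` with similar values.

    Hypothetical toy stand-in for a point prompt: this is flood fill
    (region growing), NOT the Segment Anything model.
    """
    rows, cols = len(image), len(image[0])
    r0, c0 = point
    seed = image[r0][c0]
    # The mask has the same shape as the image; True means "part of the object".
    mask = [[False] * cols for _ in range(rows)]
    mask[r0][c0] = True
    queue = deque([(r0, c0)])
    while queue:
        r, c = queue.popleft()
        # Grow into 4-connected neighbours whose value is close to the seed pixel.
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]
                    and abs(image[nr][nc] - seed) <= tolerance):
                mask[nr][nc] = True
                queue.append((nr, nc))
    return mask


# A 3x4 "image" with three flat regions (values 0, 5 and 9).
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [5, 5, 5, 9],
]

# "Click" the top-right pixel to select the region with value 9.
mask = segment_from_point(image, (0, 3))
for row in mask:
    print(["#" if v else "." for v in row])
```

Clicking a different pixel in the same image selects a different region without changing the function. In this toy, that reuse across prompts is the closest analogue to what "promptable" means.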

To further democratize segmentation, Meta is making the SA-1B dataset, which contains about 1.1 billion segmentation masks on roughly 11 million images, available for research purposes. The Segment Anything Model (SAM) itself is released under a permissive open license (Apache 2.0). The company has also built a demo that lets users try SAM on their own images.

Meta sees potential use cases for SAM in the AI, AR/VR and creator domains. SAM could become a key component of larger AI systems that aim for a more general multimodal understanding of the world. For instance, it could help such a system understand both the visual and the textual content of a webpage.

In AR/VR, SAM could let users select an object simply by looking at it and then “lift” that object into 3D. Content creators could use SAM in creative applications, for example to extract image regions for collages or video editing.

Meta has been ramping up its AI efforts amid the generative AI boom and waning interest in the metaverse. Although the company has made a US$70 billion bet on the metaverse, its metaverse division, Reality Labs, lost US$13.7 billion last year. Meta also recently wound down its NFT features on Facebook and Instagram.

In an interview with Nikkei Asia on Wednesday, Meta CTO Andrew Bosworth said that the company's top executives have been spending most of their time on AI. In February, shortly after Meta released its large language model LLaMA (Large Language Model Meta AI), CEO Mark Zuckerberg announced a new product group focused on generative AI.

The company is expected to debut some ad-creating AI applications this year, Bosworth told Nikkei.



About The Author

Cindy is a journalist at Metaverse Post, covering topics related to web3, NFT, metaverse and AI, with a focus on interviews with Web3 industry players. She has spoken to over 30 C-level execs and counting, bringing their valuable insights to readers. Originally from Singapore, Cindy is now based in Tbilisi, Georgia. She holds a Bachelor's degree in Communications & Media Studies from the University of South Australia and has a decade of experience in journalism and writing. Get in touch with her via cindy@mpost.io with press pitches, announcements and interview opportunities.
