Alibaba Introduces Open-Source Qwen-7B Language Model

Alibaba has released Qwen-7B, an open-source Large Language Model (LLM) and the company’s first publicly accessible LLM. The model has 7 billion parameters.

Qwen-7B was trained on 2.2 trillion tokens with a context length of 2048 tokens. At test (inference) time, users can extend the context to a maximum of 8192 tokens. By comparison, Llama-2 offers a context length of 4096 tokens.
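To put these figures in proportion, here is a back-of-the-envelope calculation. It uses only the numbers quoted above and treats the nominal "7 billion" as the parameter count, which is an assumption; the exact count may differ.

```python
# Figures quoted in the article; the nominal 7B parameter count is an assumption.
TRAIN_TOKENS = 2.2e12          # training tokens
NOMINAL_PARAMS = 7e9           # "7 billion parameters"
TRAIN_CONTEXT = 2048           # context length during training
MAX_INFERENCE_CONTEXT = 8192   # maximum context length at inference
LLAMA2_CONTEXT = 4096          # Llama-2 context length

# Training tokens seen per model parameter.
tokens_per_param = TRAIN_TOKENS / NOMINAL_PARAMS

# How far the context can be stretched beyond the training length.
extension_factor = MAX_INFERENCE_CONTEXT // TRAIN_CONTEXT

# Extended Qwen-7B context relative to Llama-2's context.
vs_llama2 = MAX_INFERENCE_CONTEXT / LLAMA2_CONTEXT

print(f"{tokens_per_param:.0f} training tokens per parameter")
print(f"context can be extended {extension_factor}x beyond the training length")
print(f"extended context is {vs_llama2:.0f}x the Llama-2 context")
```

In other words, the model saw roughly 314 tokens per parameter, and the extended context is four times the training length and twice Llama-2’s.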

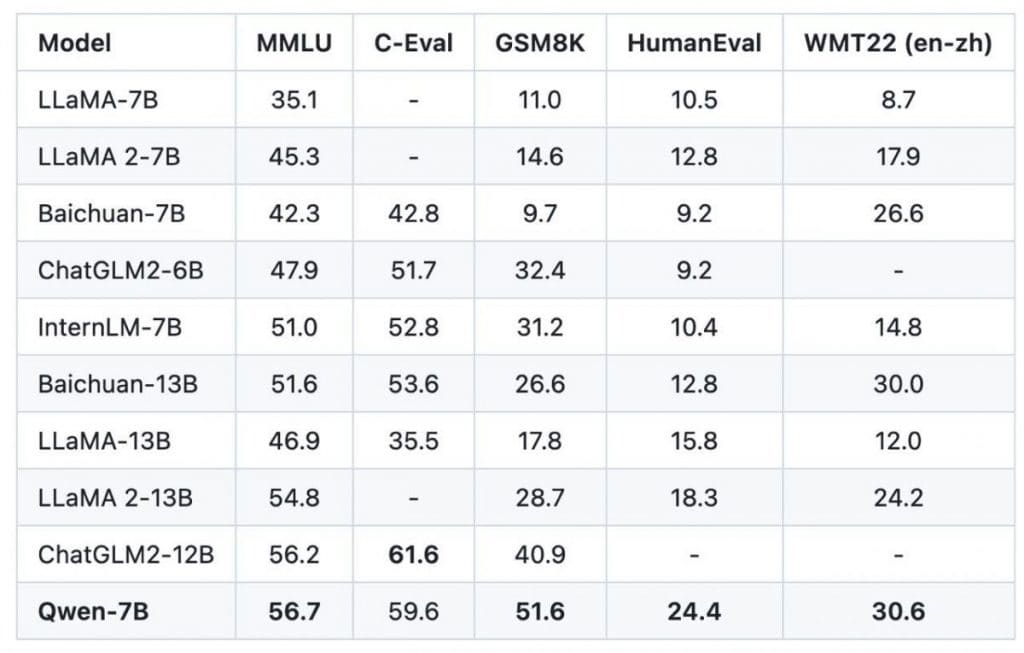

Benchmarks are essential for gauging the performance of such models, and Qwen-7B’s developers assert that it has surpassed Llama-2. One result that stands out is the HumanEval coding benchmark, where Qwen-7B scores 24.4 against Llama-2’s 12.8. These numbers should be viewed with a degree of caution. Some benchmarks indicate that Qwen-7B outperforms not just the base Llama-2-7B model but also the Llama-2-13B variant; against fine-tuned versions of Llama-2, however, the margin narrows. The developers have not explicitly detailed Qwen-7B’s exact training methodology.

As a counterpart to Llama-2-Chat, the Qwen team has also released a chat-oriented version named Qwen-7B-Chat. This model is tuned for conversation with users and can draw on various tools and APIs when responding.

For readers interested in technical specifics, Qwen-7B’s architecture resembles LLaMA’s. Its main departures from the standard transformer design are:

- It employs untied embedding.

- Rotary positional embedding is utilized.

- Biases are excluded, with the exception of QKV in attention.

- RMSNorm is favored over LayerNorm.

- Instead of the standard ReLU, SwiGLU is incorporated.

- Flash attention has been introduced to expedite the training process.

- The model comprises 32 layers, has an embedding dimension of 4096, and accommodates 32 attention heads.
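Several of the components listed above are compact enough to sketch directly. The following is a minimal illustration in plain Python, not Qwen-7B’s actual code: the function names, the `eps` and `base` defaults, and the "split halves" pairing used for the rotary embedding are all assumptions chosen for readability, and a real implementation would use a tensor library.

```python
import math

# Figures stated in the article.
N_LAYERS = 32
D_MODEL = 4096
N_HEADS = 32
HEAD_DIM = D_MODEL // N_HEADS  # dimensions handled by each attention head


def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: divide by the root mean square; no mean subtraction, no bias."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def silu(v):
    """SiLU (swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))


def matvec(x, w):
    """Multiply a vector x (length n) by a matrix w (n rows, m columns)."""
    return [sum(xi * row[j] for xi, row in zip(x, w)) for j in range(len(w[0]))]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """Bias-free SwiGLU feed-forward block: (silu(x W_gate) * (x W_up)) W_down."""
    gate = [silu(v) for v in matvec(x, w_gate)]
    up = matvec(x, w_up)
    return matvec([g * u for g, u in zip(gate, up)], w_down)


def rotary_embed(x, position, base=10000.0):
    """Rotate pairs (x[i], x[i + half]) by an angle that grows with position."""
    half = len(x) // 2
    out = [0.0] * len(x)
    for i in range(half):
        angle = position * base ** (-i / half)
        c, s = math.cos(angle), math.sin(angle)
        out[i] = x[i] * c - x[i + half] * s
        out[i + half] = x[i] * s + x[i + half] * c
    return out
```

With the stated sizes, each of the 32 heads works on 4096 / 32 = 128 dimensions. Because the rotary embedding is a pure rotation, it changes a vector’s direction according to its position but leaves its length unchanged.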

Qwen-7B’s license resembles Llama-2’s: commercial use is permitted, subject to a cap on user volume. Llama-2 sets this cap at 700 million monthly active users, while Qwen-7B’s threshold is 100 million.

Those seeking an in-depth examination can refer to the technical report available on GitHub. Additionally, a demonstration of Qwen-7B, provided in the Chinese language, is accessible for those interested in a practical exploration of the model’s capabilities.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.