Würstchen V2 Model Wins Over Stable Diffusion XL with Impressive Speed for Generating High-Resolution Images

A recent tweet from the author of the article “Würstchen” (German for “sausage”) has drawn attention from enthusiasts and experts alike. The tweet shared image-generation results from the new Würstchen V2 model.

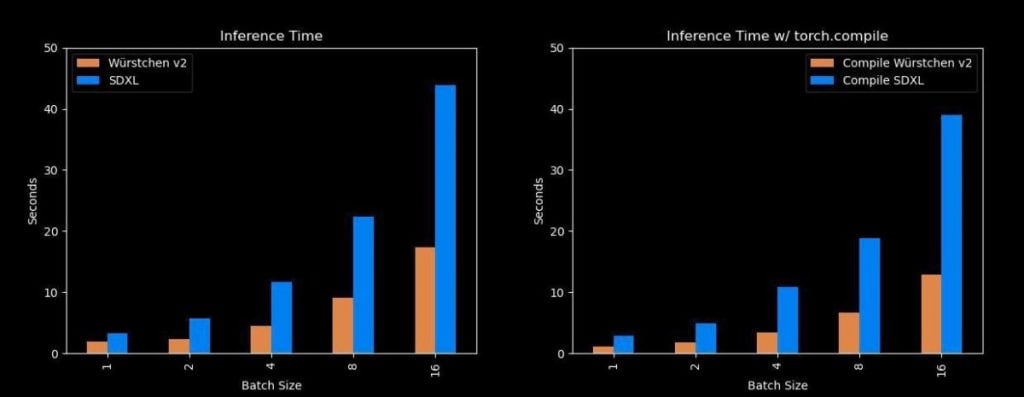

Würstchen is fast and efficient, generating images faster than models like Stable Diffusion XL while using less memory. It is also cheaper to train: Würstchen v1 required only 9,000 GPU hours of training at 512×512 resolution, compared to the 150,000 GPU hours spent on Stable Diffusion 1.4. This roughly 16x reduction in cost not only benefits researchers running new experiments but also opens the door for more organizations to train such models. Würstchen v2 used 24,602 GPU hours. Despite training at higher resolutions, that is still about 6x cheaper than SD 1.4, which was trained only at 512×512.
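The cost ratios follow directly from the GPU-hour figures quoted above. As a quick check, here is a minimal sketch that recomputes them; the dictionary and function names are illustrative only and are not taken from any Würstchen code.

```python
# GPU-hour figures as quoted in the article.
GPU_HOURS = {
    "Stable Diffusion 1.4": 150_000,
    "Würstchen v1": 9_000,
    "Würstchen v2": 24_602,
}


def cost_ratio(baseline: str, model: str) -> float:
    """Return how many times fewer GPU hours `model` used than `baseline`."""
    return GPU_HOURS[baseline] / GPU_HOURS[model]


v1_ratio = cost_ratio("Stable Diffusion 1.4", "Würstchen v1")
v2_ratio = cost_ratio("Stable Diffusion 1.4", "Würstchen v2")

print(f"Würstchen v1: {v1_ratio:.1f}x fewer GPU hours")
print(f"Würstchen v2: {v2_ratio:.1f}x fewer GPU hours")
```

The exact quotients are about 16.7 and 6.1, which the article rounds to 16x and 6x.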

Würstchen V2 is a diffusion model that works in a highly compressed latent space of images, reducing computational costs for training and inference by orders of magnitude. It employs a novel design that achieves a 42x spatial compression, a feat not previously seen. Würstchen employs a two-stage compression, Stage A and Stage B, which decode compressed images back into pixel space. A third model, Stage C, is learned in the highly compressed latent space, requiring fractions of the compute used for current top-performing models while allowing cheaper and faster inference.
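To see where a figure like 42x can come from, the sketch below divides an image side length by a latent side length. It assumes a 1024-pixel square input, which the article does not state, and uses the 24×24 and 128×128 latent sizes the article quotes for Würstchen V2 and SDXL.

```python
def spatial_compression(image_side: int, latent_side: int) -> float:
    """Per-side compression factor between pixel space and latent space."""
    return image_side / latent_side


IMAGE_SIDE = 1024          # assumption: square 1024-pixel image, not stated in the article
WUERSTCHEN_LATENT = 24     # latent side length quoted in the article
SDXL_LATENT = 128          # SDXL latent side length quoted in the article

wuerstchen_factor = spatial_compression(IMAGE_SIDE, WUERSTCHEN_LATENT)
sdxl_factor = spatial_compression(IMAGE_SIDE, SDXL_LATENT)

print(f"Würstchen V2: {wuerstchen_factor:.1f}x per side")
print(f"SDXL:         {sdxl_factor:.1f}x per side")
```

Under that assumption the Würstchen factor comes out at about 42.7x per side, against 8x for SDXL.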

Würstchen V2 comprises two diffusion stages, followed by a small decoder:

- Stage C: This stage performs text-conditioned diffusion and has 1 billion parameters. Its speed comes from very high compression: instead of the 128x128x4 latent code used in SDXL, Würstchen V2 initially operates on a 24x24x16 latent. This means far fewer spatial positions but more channels, resulting in a significant speed boost.

- Stage B: This is a diffusion model with 600 million parameters, responsible for decompressing the latent from 24×24 to 128×128.

Completing the process is Stage A, a decoder with 20 million parameters that transforms the latent code into a rendered image.
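The trade of spatial size for channels can be put in numbers. The following is a rough sketch using only the shapes and parameter counts quoted above; the variable and function names are made up for illustration.

```python
from math import prod

# Latent shapes (height, width, channels) as quoted in the article.
SDXL_LATENT = (128, 128, 4)
WUERSTCHEN_LATENT = (24, 24, 16)

# Parameter counts as quoted in the article.
PARAMETERS = {
    "text-conditioned diffusion model": 1_000_000_000,
    "decompression diffusion model": 600_000_000,
    "decoder": 20_000_000,
}


def spatial_positions(shape: tuple[int, int, int]) -> int:
    """Number of spatial locations, ignoring channels."""
    return shape[0] * shape[1]


def total_elements(shape: tuple[int, int, int]) -> int:
    """Total number of values in the latent tensor."""
    return prod(shape)


position_ratio = spatial_positions(SDXL_LATENT) / spatial_positions(WUERSTCHEN_LATENT)
element_ratio = total_elements(SDXL_LATENT) / total_elements(WUERSTCHEN_LATENT)
total_parameters = sum(PARAMETERS.values())

print(f"Spatial positions: {position_ratio:.1f}x fewer than SDXL")
print(f"Total elements:    {element_ratio:.1f}x fewer than SDXL")
print(f"Total parameters:  {total_parameters / 1e9:.2f} billion")
```

The 24x24x16 latent has about 28x fewer spatial positions and about 7x fewer values overall than the 128x128x4 latent, and the three components add up to roughly 1.62 billion parameters.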

The practical benefit that immediately stands out is speed: Würstchen V2 runs 2-2.5 times faster than SDXL, a noteworthy advance in AI image generation.

As with any technological innovation, there may be trade-offs. Some experts suggest a slight loss in image quality, although a comprehensive, fair comparison has yet to be published to provide concrete evidence.

Generated text-to-image examples are below:



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
