LLMs Have Advanced Cognitive Abilities, According to a University of California Study

In Brief

Experimental research by the Computational Vision and Learning Laboratory at the University of California has shown that large language models (LLMs) can reason as people do, rather than merely imitating human thinking through statistical patterns.

LLMs can reason about problems from scratch and induce abstract patterns, an ability that underlies human intelligence.

This discovery ends the debate about whether LLMs “think” like humans or only mimic human thinking.

A pioneering study conducted by the Computational Vision and Learning Laboratory, housed within the University of California, has cast light on the true capabilities of LLMs. Led by Professor Hongjing Lu, the research has drawn attention for its findings, published in Nature Human Behaviour under the title “Emergent Analogical Reasoning in Large Language Models.”

Empowering LLMs

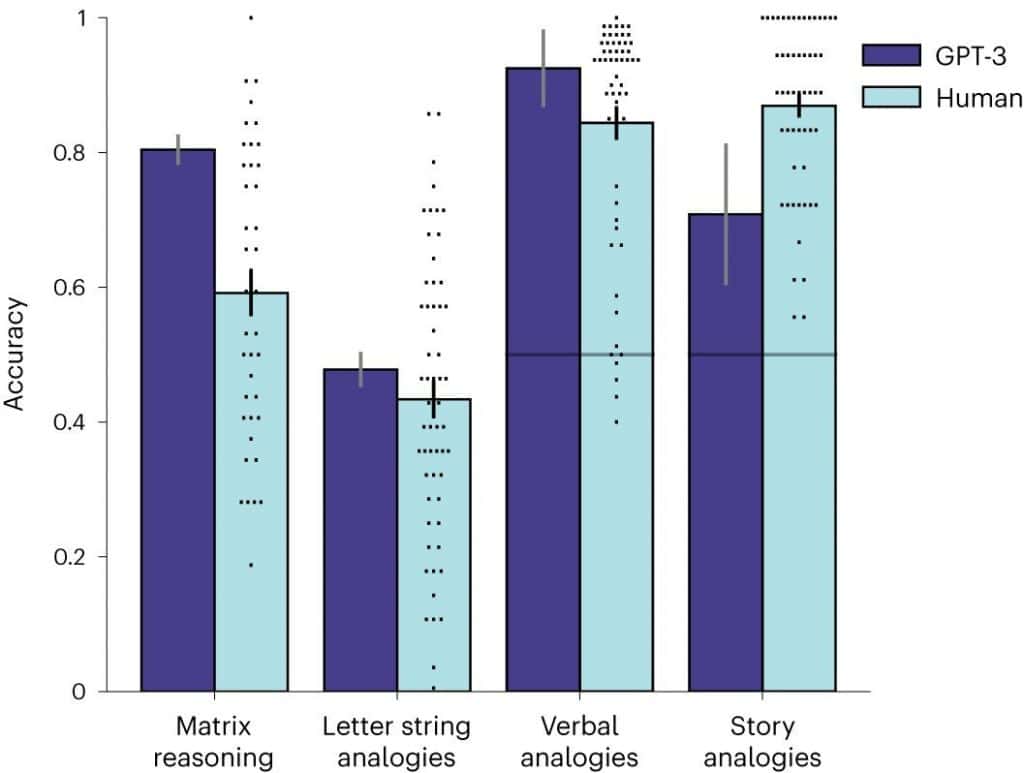

The crux of this discovery is the empirical confirmation that LLMs of the caliber of GPT-3 and beyond have not only achieved parity with human problem-solving capabilities but have, in certain respects, outpaced them. Notably, these capabilities extend to situations in which LLMs face:

- Novel challenges previously unencountered.

- The need to reason from scratch, without direct training cues.

- The need to induce abstract patterns, the ability that underlies analogy.

Unveiling Analogical Reasoning

At the heart of human intelligence resides the quintessential capability of reasoning by analogy, a process that transcends mere mimicry. This innate ability to abstract, essential to human cognition, has long set human intelligence apart. It is within this context that LLMs’ demonstration of analogical reasoning takes on paramount significance. This accomplishment stands as a critical milestone toward achieving AGI, as it underscores a facet indispensable for AGI’s realization.

Resolving Lingering Debate

The study’s findings lay to rest longstanding debates that have pervaded the field of AI and AGI development:

- The nature of LLMs’ cognition – whether it mirrors human cognitive processes or merely emulates them.

- The extent to which LLMs’ “thinking” transcends statistical mimicry and embodies genuine cognitive engagement.

Key Implications

The implications of this research reverberate across multiple dimensions:

- Cognitive Equivalence: Empirical evidence substantiates that LLMs indeed possess cognitive processes akin to human mental faculties.

- Comprehending Computational Processes: While the intricate mechanics of LLMs’ emergent relational representations warrant further exploration, it is evident that their computational foundation diverges markedly from biological intelligence.

Elevating LLMs

Moreover, this study signifies a pivotal juncture by delineating three quintessential elements that would propel LLMs from humanoid semblances to intellectual parity with humans:

I. Autonomous Goals and Motivation: Ingraining LLMs with intrinsic motivations and objectives.

II. Long-Term Memory: Equipping LLMs with enduring memory capacities.

III. Multimodal Sensory Understanding: Instilling LLMs with a physical comprehension of the world, rooted in multifaceted sensory experiences.

The dynamic interplay between computational intelligence and human cognitive uniqueness is poised to redefine the trajectory of AGI development, with tangible implications for diverse sectors ranging from technology to scientific inquiry.

The Telegram channel provided assistance for the article’s creation.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
