Text-to-Video Model Gen-2 Can Generate Short Videos Using Text Prompts

In Brief

Runway's new text-to-video model, Gen-2, can not only edit existing videos but also generate new ones from scratch using only a text prompt.

This feature is expected to improve the way people create and share content on social media platforms, allowing users to transform static images into dynamic and engaging video clips without any prior knowledge of video editing.

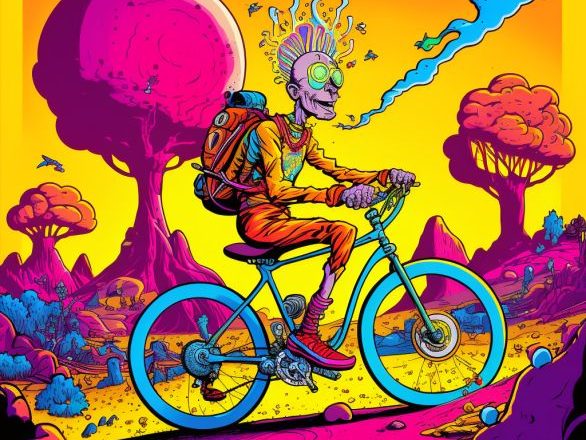

Unlike its predecessor, Gen-1, which could only edit existing videos, Gen-2 can also generate entirely new videos from a text prompt alone. The model builds on Runway's AI research and aims to produce footage that rivals human-made video, letting users generate clips automatically instead of relying on extensive video editing skills.

Gen-2 can also turn an uploaded image into a short video clip, guided by a text prompt that describes what the user wants the video to show. For now, the clips last no more than a few seconds, but their quality already appears higher than that of competing models, although a direct comparison is difficult because most competitors' neural networks are not publicly available. With Gen-2, users can easily turn static images into dynamic video clips, and there is a good chance that Facebook or TikTok will use this technology to expand the set of AI tools available to content creators.

The AI video race is wild. Just a week has passed since the launch of Gen-1, and Gen-2 is already out. Competition among tech companies to build the most advanced AI video technology is intensifying, with each company striving to outdo the others.

To try Gen-2, you will need to join the waitlist on the Runway website.

- Runway, an artificial intelligence startup, announced Gen-1, a neural network that can turn old videos into new ones by combining prompts and images. Gen-1 enables filmmakers to quickly produce content in a cost-effective manner by applying the composition and style of an image or text prompt to the structure of the source video. Runway Research is dedicated to building multimodal AI systems that enable new forms of creativity, and Gen-1 can be used to experiment with the future of storytelling.

- Last month, Sam Altman, co-founder and CEO of OpenAI, told TechCrunch that OpenAI is developing an AI model for video. He also said that GPT-4 will be made available to the public once the company is confident that it is trustworthy and safe.

- In October, Google unveiled Imagen Video, a text-conditional video generation system built on a series of video diffusion models. Given a text description, the system first generates a 16-frame clip at three frames per second with a resolution of 24 by 48 pixels. It then upsamples the clip spatially and temporally, “predicting” the missing frames, to produce a final 128-frame video at 24 frames per second and 1280×768 resolution (roughly 720p). Imagen Video was trained on 60 million image-text pairs and 14 million video-text pairs.
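The Imagen Video figures above imply that upsampling adds frames without changing how long the clip plays. The short Python sketch below is our own illustration of that arithmetic, not code from Google's Imagen Video work; the helper name `clip_duration_seconds` is hypothetical. It shows that 16 frames at 3 fps and 128 frames at 24 fps both last 16/3, or about 5.33, seconds.

```python
from fractions import Fraction


def clip_duration_seconds(frames: int, fps: int) -> Fraction:
    """Hypothetical helper: playback length of a clip as an exact fraction of seconds."""
    return Fraction(frames, fps)


# Figures quoted for Imagen Video: base output vs. final upsampled output.
base = clip_duration_seconds(16, 3)     # 16 frames at 3 fps
final = clip_duration_seconds(128, 24)  # 128 frames at 24 fps

print(f"base clip:  {float(base):.2f} s")
print(f"final clip: {float(final):.2f} s")
print(f"frame-count factor: {128 // 16}x, frame-rate factor: {24 // 3}x")
```

Both the frame count and the frame rate grow by the same factor of 8, so temporal upsampling yields a smoother clip of the same length rather than a longer one.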


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
