Text-to-Video AI Model

What is Text-to-Video AI Model?

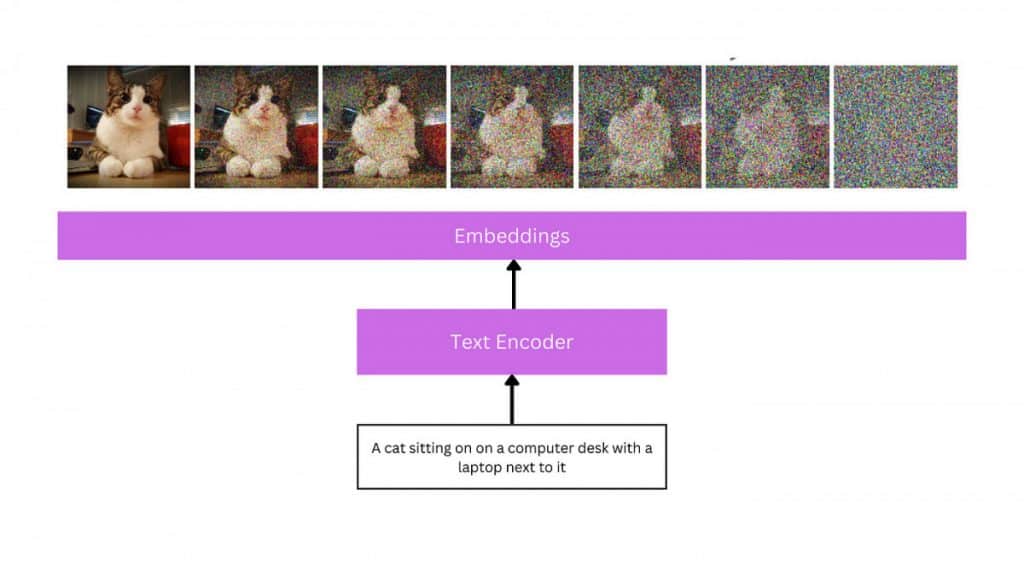

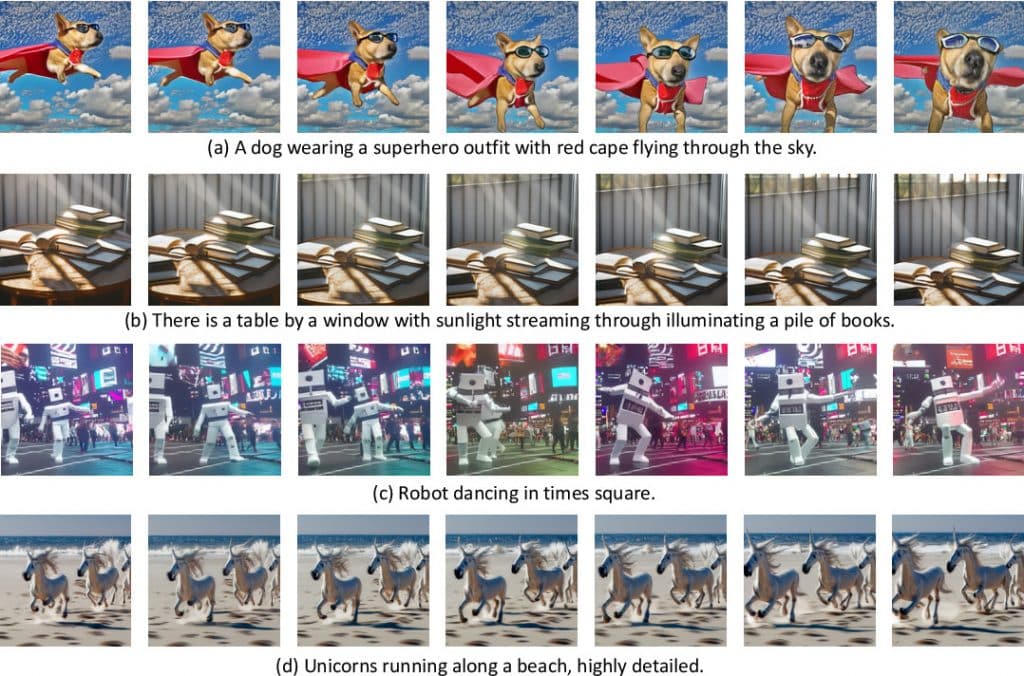

Natural language prompts are the input used by text-to-video models to create videos. These models comprehend the context and semantics of the input text and then produce a corresponding video sequence using sophisticated machine learning, deep learning, or recurrent neural network approaches. Text-to-video is a rapidly developing area that requires enormous quantities of data and processing power to train. They might be used to help with the filmmaking process or to produce entertaining or promotional videos.

Understanding of Text-to-Video AI Model

Similar to the text-to-image problem, text-to-video production has only been studied for a few years at this time. Earlier studies mostly generated frames with captions auto-regressively using GAN and VAE-based techniques. These studies are restricted to low resolution, short range, and unique, isolated movements, even though they laid the groundwork for a novel computer vision problem.

The following wave of text-to-video generation research used transformer structures, drawn by the success of large-scale pretrained transformer models in text (GPT-3) and picture (DALL-E). While works like TATS present hybrid approaches that include VQGAN for picture creation with a time-sensitive transformer module for sequential frame generation, Phenaki, Make-A-Video, NUWA, VideoGPT, and CogVideo all propose transformer-based frameworks. Phenaki, one of the works in this second wave, is especially intriguing since it allows one to create arbitrarily lengthy films based on a series of prompts, or a narrative. Similarly, NUWA-Infinity allows the creation of extended, high-definition films by proposing an autoregressive over autoregressive generation technique for endless picture and video synthesis from text inputs. However, the NUWA and Phenaki models are not accessible to the general public.

The majority of text-to-video models in the third and current wave include diffusion-based topologies. Diffusion models have shown impressive results in generating rich, hyper-realistic, and varied images. This has sparked interest in applying diffusion models to other domains, including audio, 3D, and, more recently, video. Video Diffusion Models (VDM), which expand diffusion models into the video domain, and MagicVideo, which suggests a framework for producing video clips in a low-dimensional latent space and claims significant efficiency benefits over VDM, are the forerunners of this generation of models. Another noteworthy example is Tune-a-Video, which allows one text-video pair to be used to fine-tune a pretrained text-to-image model and allows one to change the video content while maintaining motion.

Future of Text-to-Video AI Model

Hollywood’s text-to-video and artificial intelligence (AI) future is full with opportunities and difficulties. We may anticipate much more complex and lifelike AI-generated videos as these generative AI systems develop and become more proficient at producing videos from text prompts. The possibilities offered by programs like Runway’s Gen2, NVIDIA’s NeRF, and Google’s Transframer are only the tip of the iceberg. More complex emotional expressions, real-time video editing, and even the capacity to create full-length feature films from a text prompt are possible future developments. For example, storyboard visualization during pre-production might be accomplished with text-to-video technology, giving directors access to an unfinished version of a scene before it is shot. This might result in resource and time savings, improving the efficiency of the filmmaking process. These tools may also be used to quickly and affordably produce high-quality video material for marketing and promotional reasons. They can also be used to create captivating videos.

Latest News about Text-to-Video AI Model

- Zeroscope, a free and open-source text-to-video technology, is a competitor to Runway ML’s Gen-2. It aims to transform written words into dynamic visuals, offering higher resolution and a closer 16:9 aspect ratio. Available in two versions, Zeroscope_v2 567w and Zeroscope_v2 XL, it requires 7.9 GB of VRam and introduces offset noise to enhance data distribution. Zeroscope is a viable open-source alternative to Runway’s Gen-2, offering a more diverse range of realistic videos.

- VideoDirectorGPT is an innovative approach to text-to-video generation, combining Large Language Models (LLMs) with video scheduling to create precise and consistent multi-scene videos. It uses LLMs as a storytelling master, crafting scene-level text descriptions, object lists, and frame-by-frame layouts. Layout2Vid, a video generation module, provides spatial control over object layouts. Yandex’s Masterpiece and Runway’s Gen-2 models offer accessibility and simplicity, while also improving content creation and sharing on social media platforms.

- Yandex has introduced a new feature called Masterpiece, which allows users to create short videos lasting up to 4 seconds with a frame rate of 24 frames per second. The technology uses the cascaded diffusion method to craft subsequent video frames, allowing users to generate a wide array of content. The Masterpiece platform complements existing capabilities, including image creation and text posts. The neural network generates videos through text-based descriptions, frame selection, and automated generation. The feature has gained popularity and is currently available exclusively to active users.

Latest Social Posts about Text-to-Video AI Model

Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Viktoriia is a marketing researcher and copywriter with a background in international relations. Her professional portfolio includes the writing of research papers focused on the import and export of products to Europe and Asia. Proficiency in the Chinese language and the time she has spent in China have extended her capabilities to master not only European markets but also those in China and Singapore. While currently living in Italy, Viktoriia continues to deepen her knowledge and skills in marketing and copywriting. Her experience allows her to perform analytical work and create texts on a diverse range of topics, ensuring accessibility to a broad audience.

More articles

Viktoriia is a marketing researcher and copywriter with a background in international relations. Her professional portfolio includes the writing of research papers focused on the import and export of products to Europe and Asia. Proficiency in the Chinese language and the time she has spent in China have extended her capabilities to master not only European markets but also those in China and Singapore. While currently living in Italy, Viktoriia continues to deepen her knowledge and skills in marketing and copywriting. Her experience allows her to perform analytical work and create texts on a diverse range of topics, ensuring accessibility to a broad audience.