Easy-to-Hard Generalization

What is Easy-to-Hard Generalization?

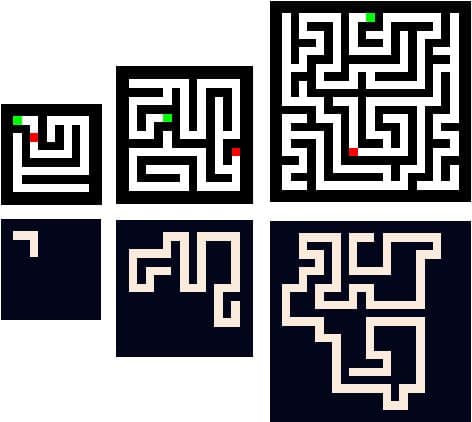

Easy-to-Hard Generalization refers to the process of evaluating the performance of algorithms on tasks that vary in complexity, from simple and manageable ones to more challenging ones. In the context of AI development, this approach helps ensure that models are not only effective at handling straightforward tasks but also capable of scaling their behavior when faced with more complex challenges.
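One way to make this kind of evaluation concrete is to report accuracy separately for each difficulty level and compare the results. The following is a minimal sketch, not a standard benchmark procedure: the function name `accuracy_by_difficulty`, the "easy"/"hard" labels, and the evaluation log are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def accuracy_by_difficulty(results):
    """Return accuracy per difficulty level from (difficulty, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for difficulty, is_correct in results:
        totals[difficulty] += 1
        correct[difficulty] += int(is_correct)
    return {level: correct[level] / totals[level] for level in sorted(totals)}


# Hypothetical evaluation log: (difficulty level, whether the model was correct).
results = [
    ("easy", True), ("easy", True), ("easy", True), ("easy", False),
    ("hard", True), ("hard", False), ("hard", False), ("hard", False),
]

report = accuracy_by_difficulty(results)
# A large gap suggests the model does not scale its behavior to harder tasks.
gap = report["easy"] - report["hard"]
print(report, gap)
```

A small gap between the easy and hard buckets would suggest that the model's behavior carries over to more complex tasks; a large gap suggests that it does not.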

Understanding Easy-to-Hard Generalization

For instance, consider a model that is tested on identifying bugs in a small piece of code. Easy-to-hard generalization asks whether the same model still performs well when the code is larger or the bugs are subtler.

In machine learning, easy-to-hard generalization can involve training a model on a dataset that starts with simple or well-separated examples and gradually introduces more complex or overlapping ones, an approach often called curriculum learning. This approach aims to enhance the model’s ability to handle challenging scenarios and improve its overall performance on unseen data.
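The gradual schedule described above can be sketched as a generator that sorts examples by a difficulty score and releases them in growing pools. This is only an illustrative sketch: the helper `curriculum_stages`, the use of string length as a difficulty proxy, and the toy expressions are assumptions, not part of any particular library or published method.

```python
def curriculum_stages(examples, difficulty, num_stages):
    """Yield growing training pools, with the easiest examples first."""
    ordered = sorted(examples, key=difficulty)
    for stage in range(1, num_stages + 1):
        # Each stage exposes a larger prefix of the difficulty-sorted data.
        cutoff = max(1, len(ordered) * stage // num_stages)
        yield ordered[:cutoff]


# Toy data: arithmetic expressions, with length as a stand-in for difficulty.
examples = ["a+b", "a", "(a+b)*c", "a*b+c", "((a+b)*c)-d", "a-b"]

stages = list(curriculum_stages(examples, difficulty=len, num_stages=3))
for index, pool in enumerate(stages, start=1):
    print(f"stage {index}: {pool}")
```

In a real setup, each pool would be passed to a training step before moving to the next stage, and the difficulty function would be replaced by a task-specific measure.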

In perceptual learning, easy-to-hard generalization can involve training individuals on perceptual tasks that start with easily distinguishable stimuli and gradually introduce more difficult or ambiguous stimuli. This process helps individuals develop better discrimination abilities and generalize their learning to a wider range of stimuli.

Overall, easy-to-hard generalization is a strategy used to enhance learning, improve performance, and promote better generalization capabilities by gradually increasing the difficulty or complexity of examples or tasks.

Latest News about Easy-to-Hard Generalization

- Researchers from University College London have introduced Spawrious, an image classification benchmark suite designed to probe spurious correlations in AI models. The dataset, consisting of 152,000 high-quality images, includes both one-to-one (O2O) and many-to-many (M2M) spurious correlations. The team found that the benchmark is challenging for current models, exposing weaknesses that stem from their reliance on spurious background features. The results also highlighted the need to capture the intricate relationships and interdependencies in M2M spurious correlations.

- The new AI, known as a Differentiable Neural Computer (DNC), relies on a high-throughput external memory device to store previously learned models and generate new neural networks based on archived models. This new form of generalized learning could pave the way for an era of AI that will strain the human imagination.

- A recent paper claimed that GPT-4, a large language model (LLM), scored 100% on MIT’s curriculum. The study was later found to contain incomplete questions and biased evaluation methods, and the model's actual accuracy was significantly lower.

- The Allen Institute for AI’s paper “Faith and Fate: Limits of Transformers on Compositionality” discusses the limitations of transformer-based models, focusing on compositional problems that require multi-step reasoning. The study found that transformer models show a drop in performance as task complexity increases, and that fine-tuning with task-specific data improves performance within the trained domain but fails to generalize to unseen examples. The authors suggest that transformers may be fundamentally limited in complex compositional reasoning because they rely on pattern matching, memorisation, and single-step operations.

FAQs

Easy-to-Hard Generalization refers to the process of training or learning models, algorithms, or systems by gradually increasing the difficulty or complexity of the examples or tasks. The idea behind easy-to-hard generalization is to start with simpler or easier examples and gradually introduce more challenging or difficult ones to improve the model’s ability to generalize and perform well on a wide range of inputs.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
