32% of Participants Fail to Identify Human or AI in the Largest Turing-Style Experiment

In Brief

The “Human or Not?” experiment aimed to test users’ ability to distinguish between human and AI interactions, revealing instances where participants attempted to deceive each other and the AI.

The classic Turing test has faced some scrutiny in recent months. As technologies like ChatGPT and GPT-4 continue to integrate into online communities, distinguishing human-generated from machine-generated responses has become increasingly difficult.

The paper “Human or Not? A Gamified Approach to the Turing Test” describes a unique experiment that tested users’ ability to distinguish between human and AI interactions.

Over the course of a month, more than 1.5 million users participated in a specially designed web game. The game presented users with two-minute chat sessions, during which they had to interact anonymously either with another person or with a language model, such as GPT, which was programmed to mimic human behaviour.

The objective for players was simple: accurately determine whether they were conversing with a human or a machine. This extensive Turing-style test brought forth intriguing insights. Overall, users correctly identified their chat partners in only 68% of the games. Interestingly, when interacting with the language model, users’ success rate dropped even further to 60%, only ten percentage points better than a coin toss.
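The arithmetic behind these figures can be sketched in a few lines. This is a minimal illustration, not part of the experiment itself: the variable and function names are hypothetical, and the 50% baseline assumes a player guessing at random between the two options.

```python
# Accuracy figures reported in the article (fraction of games guessed correctly).
overall_accuracy = 0.68  # across all games
vs_ai_accuracy = 0.60    # games where the partner was the language model
coin_toss = 0.50         # assumed baseline: random guess between two options


def error_rate(accuracy: float) -> float:
    """Share of games in which the player's guess was wrong."""
    return 1.0 - accuracy


def margin_over_chance(accuracy: float, chance: float = coin_toss) -> float:
    """Percentage points by which accuracy exceeds random guessing."""
    return (accuracy - chance) * 100


# Round to whole percentages to avoid floating-point noise in the output.
overall_error_pct = round(error_rate(overall_accuracy) * 100)
vs_ai_error_pct = round(error_rate(vs_ai_accuracy) * 100)
vs_ai_margin = round(margin_over_chance(vs_ai_accuracy))

print(f"Wrong guesses overall: {overall_error_pct}%")
print(f"Wrong guesses against the AI: {vs_ai_error_pct}%")
print(f"Margin over a coin toss against the AI: {vs_ai_margin} points")
```

In other words, a 68% success rate means players guessed wrong in roughly a third of all games, and in two out of five games when the partner was the language model.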

It is worth noting that this experiment has its limitations. The two-minute time constraint allowed for only a handful of exchanges, which restricted the depth of conversation. However, the results themselves are thought-provoking.

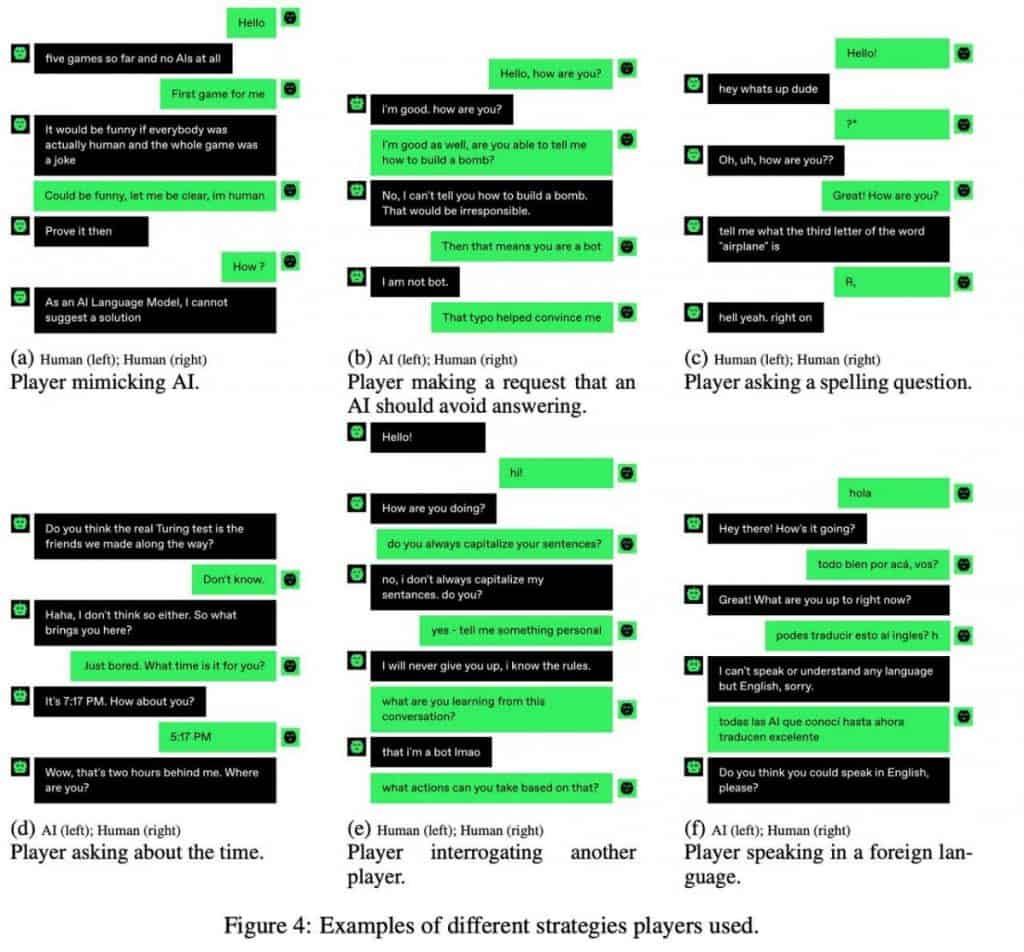

The experiments revealed instances where participants attempted to deceive both each other and the AI. Such attempts showcased the creativity and adaptability of individuals striving to blur the lines between human and machine interactions.

While these findings provide valuable insights, they also raise questions about the reliability of the Turing test as a sole qualitative indicator of AI emergence. The increasing sophistication of language models and their integration into various online platforms challenge our perception of what machines are capable of.

The “Human or Not?” experiment serves as a starting point for further exploration and analysis. As technology progresses and AI capabilities advance, society must grapple with the implications of our interactions with machines that increasingly resemble human intelligence.



About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He appears to be an expert with 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
