DALL-E 3 Release Amplifies OpenAI’s Influence, Leaving Midjourney and Stable Diffusion Behind

In Brief

DALL-E 3 will be integrated with GPT-4 in ChatGPT, initially for ChatGPT Plus subscribers.

DALL-E 3 refrains from recreating images of public figures when their names are explicitly mentioned.

Access to DALL-E 3 is scheduled to begin in October.

OpenAI has unveiled its latest creation: DALL-E 3. Unlike its predecessors, DALL-E 3 focuses on refining the minutiae, addressing issues like lettering and intricate body details, such as fingers. The result? An array of aesthetically pleasing images without the need for complex prompts or workarounds.

Note that this release does not come with detailed implementation notes, a research paper, or an API. Instead, DALL-E 3 will be integrated with GPT-4 in ChatGPT, initially for ChatGPT Plus subscribers.

This development may not be a seismic shift in the AI landscape, but rather a step forward in collaboration between models. Many anticipate that the next Stable Diffusion model will offer even greater sophistication and artistic appeal.

To put it in context, OpenAI’s journey through AI image generation has been quite a ride:

- 2021: DALL-E 1, a 12-billion parameter model, was introduced with limited information.

- 2021: GLIDE, a 3.5-billion-parameter model, was unveiled along with smaller, open-source 300-million-parameter models.

- 2022: DALL-E 2 arrived with 3.5 billion parameters, accompanied by the unCLIP paper and an API.

- 2023: DALL-E 3 has arrived. The details are scarce, but one thing is clear: it will be integrated with GPT-4 for ChatGPT Plus subscribers.

So far, few DALL-E 3 images have been published. There is no codebase, technical blog post, or detailed comparison with state-of-the-art (SOTA) models. OpenAI appears to be keeping its cards close to its chest.

OpenAI says the model understands nuance and detail better than its predecessors, which should make it easier to translate creative concepts into precise images.

One intriguing promise of DALL-E 3 is its integration with ChatGPT. Users will not need to craft intricate prompts; a brief description should suffice, and ChatGPT will generate a detailed prompt on their behalf.

OpenAI has also emphasized the importance of context in lengthy prompts. DALL-E 3 is designed to embrace verbosity, making it more attuned to the context described in extensive prompts.

Yet, as with any new AI model, there’s an element of the unknown. While initial glimpses look promising, the true litmus test will come with extended usage. Questions linger about its efficiency and speed of operation.

One plausible guess is that DALL-E 3 uses a multi-stage diffusion process with GPT-4 serving as the text encoder, but OpenAI has not confirmed this, and the details may remain undisclosed.

Access to DALL-E 3 is scheduled for October, initially for ChatGPT Plus and ChatGPT Enterprise users, with broader access for researchers possibly following later.

Nuances and Censorship of DALL-E 3

One of the primary focal points of DALL-E 3’s development was deliberately curbing its capabilities. This involved stringent alignment and filters designed to exclude specific types of content. For instance, the model refuses to generate images of famous people, replicate artworks in the style of living artists, or create any content that OpenAI deems unsafe. This approach is not just about limitations; it is also a proactive measure to shield the company from potential legal entanglements.

Yet, beyond these filters and alignments, some intriguing observations come to light. DALL-E 3 appears to exhibit a certain weakness when it comes to generating photorealistic content. Instead of producing images that mimic real photographs flawlessly, the output carries a distinct stylized quality. These AI-crafted pictures exude an almost rendered and slightly plastic appearance. Even when explicitly prompted with the word “photograph,” the result remains entrenched in its characteristic stylization.

Despite these idiosyncrasies, DALL-E 3 shows remarkable potential, and some of its creations strikingly resemble photographs. Bear in mind, though, that this simulated realism does not necessarily match how a genuine photograph of the same subject would look, especially for a scene submerged underwater.

DALL-E 3 Features and Details

Let’s take a moment to sift through the pixels and read between the lines to understand what this new model truly offers.

The Art of Stylization: Glancing through OpenAI’s Instagram account, you’ll notice an abundance of artwork characterized by exquisite stylization. While there’s an impressive array of abstract compositions and designs, the model appears to steer clear of producing photorealistic content. The emphasis here is on aesthetics and creativity, not mimicking reality.

Artistic Constraints: DALL-E 3 takes a different path from its predecessor. It refuses to create images in the style of living artists, a stark departure from DALL-E 2, which could imitate certain artists’ styles. This may raise eyebrows in the creative community, much like the lukewarm reception of Stable Diffusion 2.0.

Empowering Artists: To respect artists’ rights, OpenAI lets artists opt their work out of the training of future image generation models. By submitting images they own the rights to, artists can request that those images be excluded. The intent is for future versions of DALL-E to avoid generating content that closely resembles that work.

Security and Censorship: OpenAI’s focus on safety is palpable. The company worked with external “red teams” to probe the model and uses input classifiers to block prompts that could lead to explicit or harmful content. DALL-E 3 also declines to depict public figures when they are named in a prompt. How broadly OpenAI defines “public figures” remains unclear, and the restriction could affect the quality of generated faces.

Watermarks and Tracking: OpenAI hints at embedding tags that identify AI-generated images, a move toward better monitoring and, potentially, watermarking of generated content.

Text and Hands Improved: OpenAI touts improved text generation and hand rendering, a common claim among competitors. The real test lies in the actual output beyond cherry-picked examples.

Spatial Comprehension: OpenAI claims DALL-E 3 better understands the spatial relationships described in prompts, which should help it construct complex angles and compositions, though users are still waiting for concrete evidence.

The Power of Prompts: The crux of DALL-E 3 lies in its prompt handling and its integration with ChatGPT, which promise faster, simpler, and more automated prompt design. The trend is toward ChatGPT writing the prompts, turning vague ideas or rudimentary prompts into detailed, eloquent ones. DALL-E 3’s improved contextual understanding streamlines the process, letting users focus on intent rather than verbosity.

Uncharted Territories: Notably absent from the discussion are aspects like inpainting, outpainting, generative fill, and 3D modeling. The absence of these features could be a limitation, especially for users accustomed to more versatile models.

Access Details: DALL-E 3 is set to become available to ChatGPT Plus and Enterprise customers in early October. However, the specifics regarding the allocation of credits for ChatGPT Plus users and the associated costs remain unclear. Access will be provided via the API and the OpenAI Labs platform “later in the fall.”

Integration Prowess: DALL-E 3 is also set to be integrated into Microsoft and partner products. Expect it to generate presentations, illustrations, designs, and logos in context, with assistance from ChatGPT. This integration could quickly go mainstream, posing a significant challenge to competitors such as Google’s Bard and Ideogram.

The Convergence of LLM and Visual Content: The most intriguing aspect lies in the convergence of Large Language Models (LLMs) and visual content generation models. It signifies a shift from complex prompt engineering to expressing ideas in a more accessible language. The AI will glean context and ideas from these expressions, offering creative possibilities that are hard to resist.

DALL-E 3: A New Leader in AI Image Generation

OpenAI’s decision to integrate DALL-E 3 into the ChatGPT ecosystem is a strategic move. It gives DALL-E 3 access to ChatGPT’s vast base of roughly 100 million active users, which significantly enhances its accessibility and could catapult its popularity.

Currently, Midjourney and Stable Diffusion boast around 15 million registered users. With this integration, DALL-E 3 gains access to a user base more than six times larger, about 100 million users. This makes the ChatGPT Plus subscription all the more appealing, since it offers a chatbot, analytical tools, and image generation at an affordable price.

The integration is not only advantageous for existing users but also serves as a powerful magnet for new users. It expands the OpenAI ecosystem’s reach and popularity, drawing in individuals who seek AI-generated content solutions.

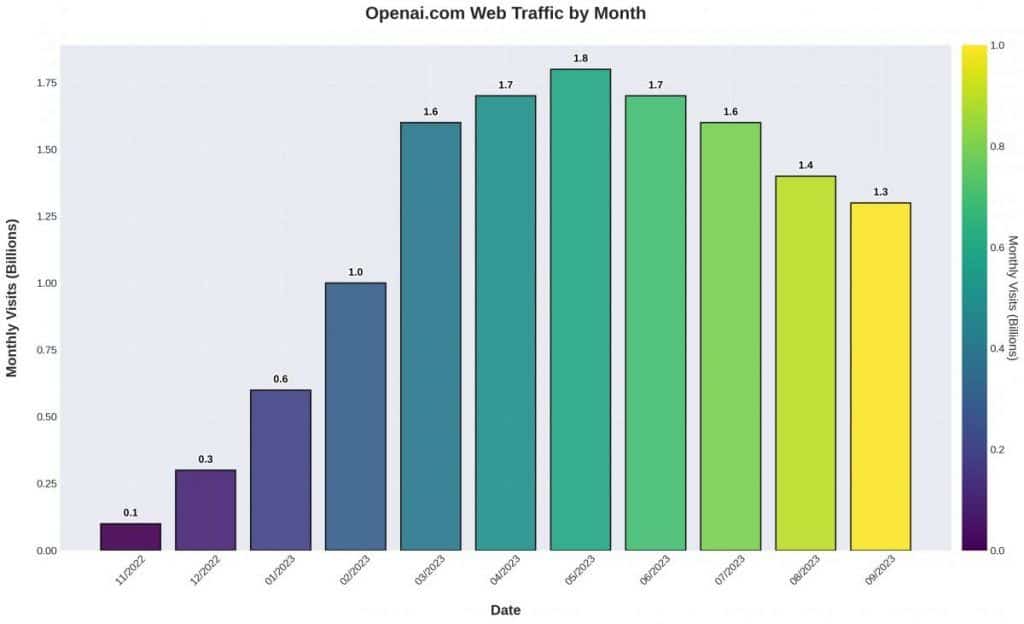

This strategic move is poised to boost OpenAI’s revenue and other key metrics. The company’s investors will likely view this development favorably, especially in light of a recent 20% decline in traffic volume during the summer.


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
