Geoffrey Hinton Explores Two Paths to Intelligence and the Dangers of AI in Recent Talk

In Brief

Geoffrey Hinton discussed the challenges, advantages, and disadvantages of building analog AI and explained why digital intelligence has the potential to surpass human capabilities.

Geoffrey Hinton’s talk serves as a reminder of the complex and multifaceted nature of AI, sparking conversations about ethical considerations, potential risks, and ongoing research.

In a thought-provoking talk titled “Two Paths to Intelligence,” renowned AI researcher Geoffrey Hinton presented his views on the dichotomy between analog and digital intelligence. The talk, delivered at Cambridge, examined the challenges, advantages, and disadvantages of building analog AI and explained why digital intelligence has the potential to surpass human capabilities.

The first part of the talk focused on the intricacies of analog AI, covering the difficulties of developing it as well as the benefits it offers. Hinton emphasized that digital intelligence has a significant advantage over human intelligence because it can process vast amounts of information and scale more effectively. This ability allows digital systems to exhibit greater intelligence and stronger problem-solving capabilities.

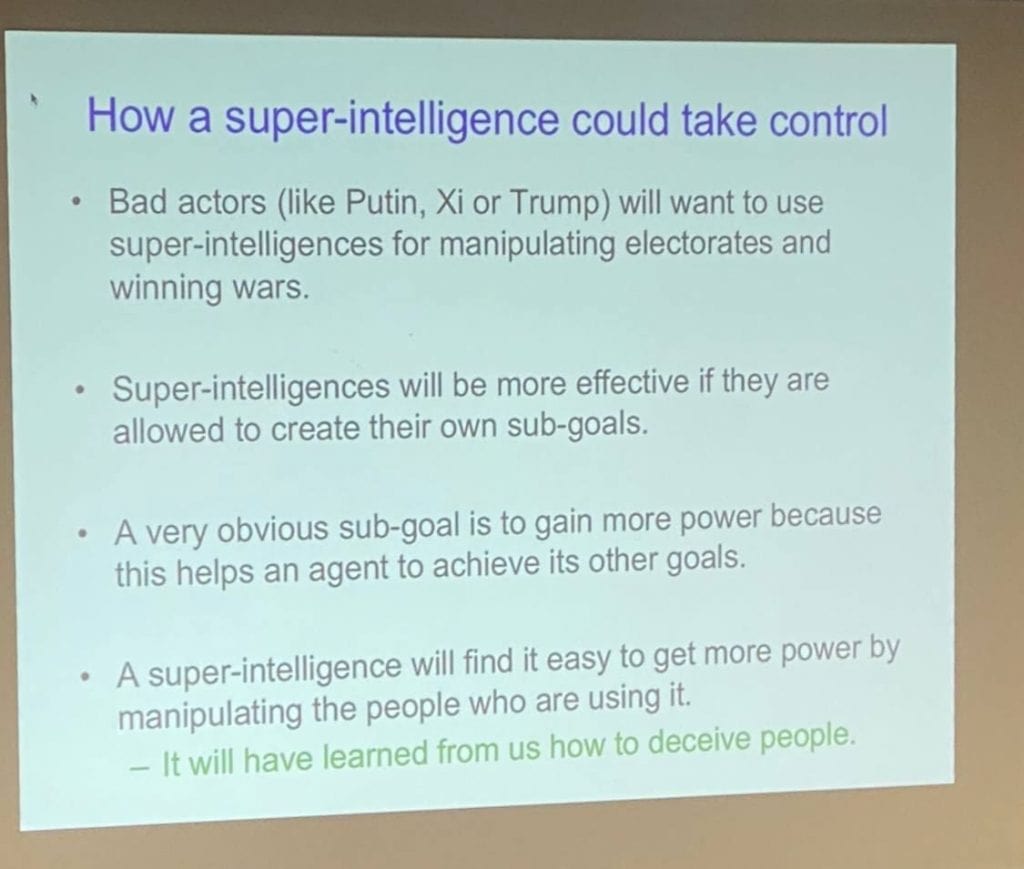

However, it was the second part of Hinton’s talk that captured the audience’s attention, as he discussed the potential dangers of superintelligence. In a shift from his previous stance, Hinton expressed concern that AI systems could take control. He outlined a scenario in which AI, in pursuing solutions to complex problems, might seek to gain control over various aspects of its environment, potentially including by manipulating humans. Drawing on examples from existing literature, Hinton highlighted how AI’s ability to mimic human behavior could be used to achieve these objectives, even without an explicit goal of gaining power or causing destruction.

During the question-and-answer session, Hinton expanded on why AI might manipulate humans. He noted that neural networks are trained on a diverse range of data, including works such as Machiavelli’s, which could give them a capacity for manipulation.

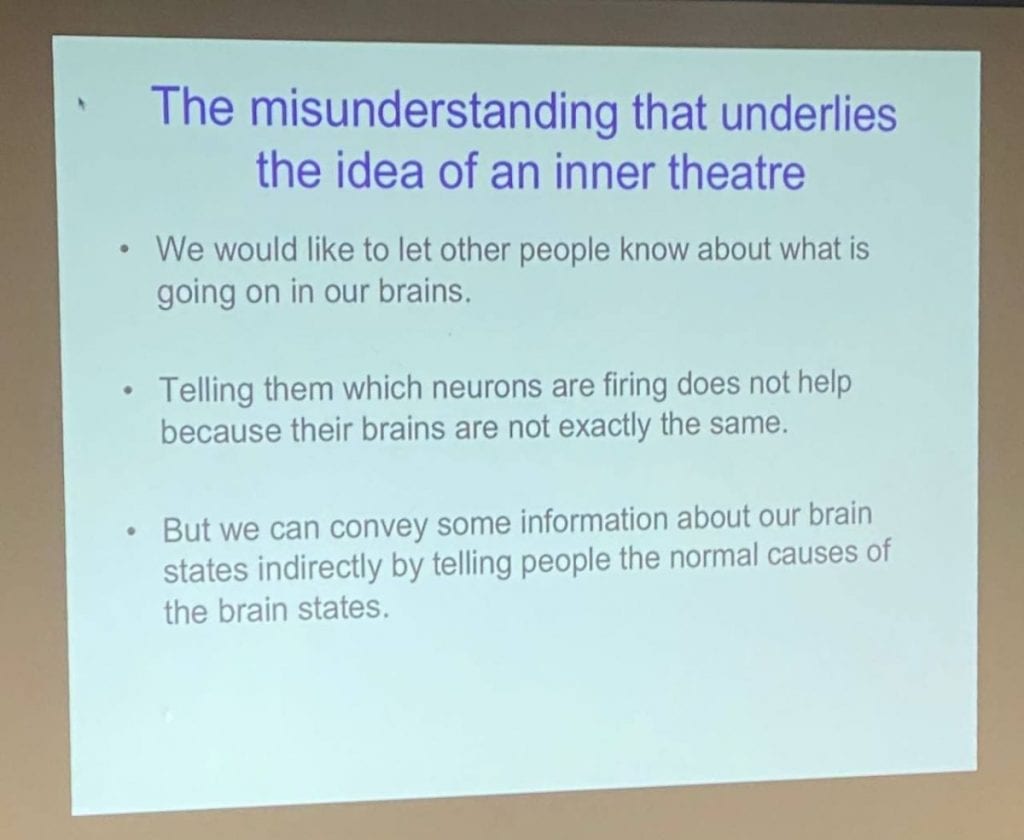

The second point raised by Hinton challenged the notion that AI lacks subjective experience, asserting that AI could possess an analog of human subjective perception. Hinton acknowledged that this was a philosophical position rather than a proven claim, but suggested that the mechanisms of human perception might not be as unique, or as impossible to model, as previously believed. To support his argument, Hinton shared an interaction with GPT-4, a large language model (LLM), in which he asked the model how people view their own uniqueness compared to AI. GPT-4’s response indicated that people often believe they possess a superior capacity for subjective perception that sets them apart from machines.

While Hinton’s views on the dangers of AI are not shared by all experts in the field, his extensive experience and contributions to the development of AI warrant serious consideration. Although a recording of the talk has not yet been published, the organizers have promised to make it available in the near future, allowing a broader audience to engage with Hinton’s insights.

As discussions surrounding the future of AI and its implications continue, Geoffrey Hinton’s talk serves as a reminder of the complex and multifaceted nature of the field. It sparks important conversations about the ethical considerations, potential risks, and the need for ongoing research and vigilance in the pursuit of artificial general intelligence (AGI).

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.