Midjourney 5.2 and Stable Diffusion SDXL 0.9 Updates for Creative Text-to-Image Generation

In Brief

StabilityAI has released the latest model, Stable Diffusion SDXL 0.9, which promises enhanced perception of prompts and improved image detail.

Midjourney 5.2 introduces new features such as Outpainting, the /shorten command, customizable variation strength, and 1:1 image transformation.

These updates are expected to improve the user experience and expand what users can achieve creatively.

Today, both of the major text-to-image generators released significant updates. They bring improved accuracy in generating realistic images from text descriptions, along with new features that give users more control over the style and composition of the generated images.

New Features in Midjourney 5.2

In addition to StabilityAI’s updates, Midjourney has also introduced exciting features with the release of Midjourney 5.2. One notable addition is the Zoom Out feature, which resembles Adobe’s Generative Fill for Photoshop. Unlike Generative Fill, however, Midjourney’s Zoom Out does not use masks, and the outcome depends largely on the zoom-out factor.

Midjourney 5.2 brings a range of new capabilities that improve the user experience. However, the simultaneous release of Stable Diffusion XL 0.9 overshadowed this strong update, and many users initially overlooked it.

Key features and improvements in Midjourney 5.2 include:

- Outpainting: Users can now outpaint images with zoom-out options of 1.5x, 2x, or a custom value. Applied iteratively, this feature yields impressive results.

- Customizable variations: The strength of variations in Midjourney is now customizable. Users can switch between weak and strong variations by choosing one of two buttons, which offers greater flexibility in the creative process.

- 1:1 image transformation: Midjourney now lets users turn any image into a square with a 1:1 aspect ratio, making it easier to use in formats that call for square images.
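To make the zoom-out and square options more concrete, here is a minimal sketch of the canvas arithmetic behind them. It assumes, purely for illustration, that zooming out by a factor scales both sides of the canvas and that making an image square extends its shorter side; the function names are hypothetical and this is not Midjourney’s documented behavior.

```python
# Hypothetical sketch of outpainting canvas arithmetic.
# Assumptions (not Midjourney's documented behavior): a zoom-out factor scales
# both sides of the canvas, and "make square" extends the shorter side.


def zoom_out_canvas(width: int, height: int, factor: float) -> tuple[int, int]:
    """Return the canvas size after zooming out by `factor` (e.g. 1.5 or 2)."""
    if factor < 1.0:
        raise ValueError("zoom-out factor must be >= 1")
    return round(width * factor), round(height * factor)


def make_square_canvas(width: int, height: int) -> tuple[int, int]:
    """Return the smallest 1:1 canvas that fully contains the original image."""
    side = max(width, height)
    return side, side


def outpainted_fraction(original: tuple[int, int], canvas: tuple[int, int]) -> float:
    """Share of the new canvas that must be generated rather than kept."""
    kept = original[0] * original[1]
    total = canvas[0] * canvas[1]
    return 1 - kept / total


if __name__ == "__main__":
    img = (1024, 1024)
    for f in (1.5, 2.0):
        canvas = zoom_out_canvas(*img, f)
        print(f, canvas, round(outpainted_fraction(img, canvas), 3))
    print(make_square_canvas(1456, 816))
```

Under these assumptions a 2x zoom-out leaves the original occupying a quarter of the frame, so three quarters of the result is newly generated, and applying the feature repeatedly compounds this. The generated share depends only on the factor, not on the pixel resolution.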

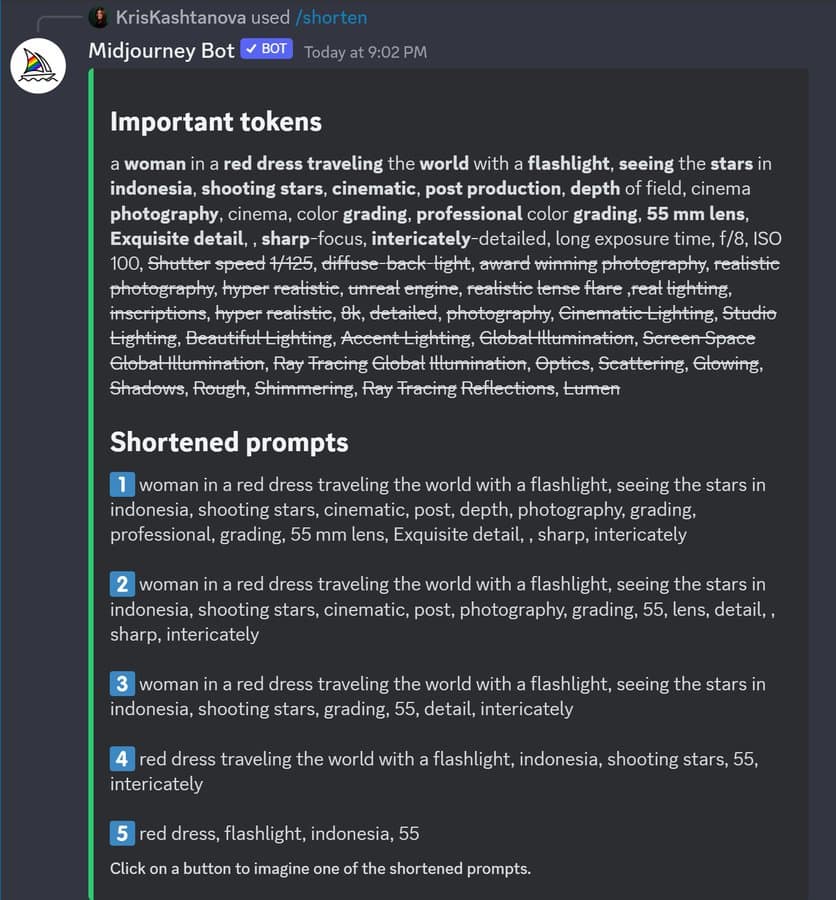

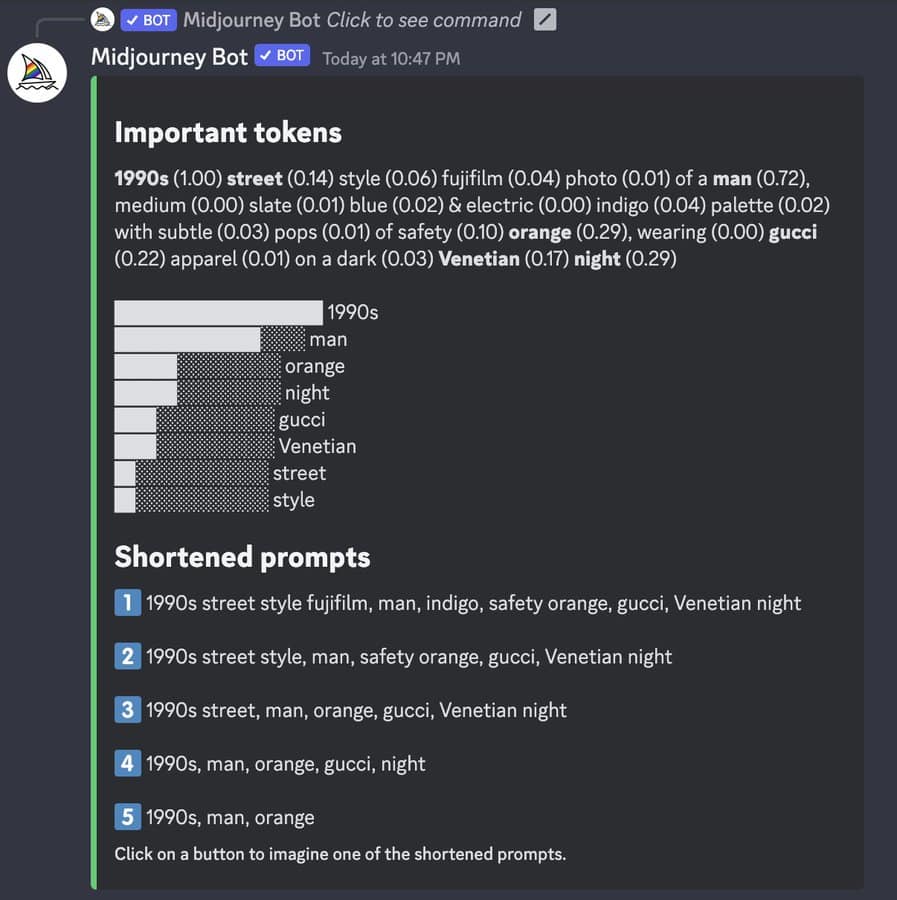

Midjourney 5.2 also introduces a new feature called the prompt parser, which makes it easier to write prompts that produce impressive images. The command “/shorten [your prompt]” identifies the important words in a prompt and flags unnecessary ones, helping users optimize their prompts. The parser also shows a weight for each word and offers five shortened options to choose from. In short, it helps turn a vague idea into a prompt that better matches what the user intends.
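The idea of turning per-word weights into several shorter prompts can be illustrated with a toy example. This is not Midjourney’s /shorten implementation: the weights below are invented, and the rule used here (keep the top-k words in their original order) is an assumption chosen only to show how weights can yield five ranked options.

```python
# Toy illustration only: NOT Midjourney's /shorten implementation.
# Assumption: every word already carries an importance weight (the values
# below are invented); shorter prompts keep the top-k words in original order.


def shorten_candidates(weighted: list[tuple[str, float]], n_options: int = 5) -> list[str]:
    """Return up to `n_options` progressively shorter versions of a prompt."""
    # Rank word positions by descending weight; ties go to the earlier word.
    ranked = sorted(range(len(weighted)), key=lambda i: (-weighted[i][1], i))
    options = []
    # Drop one more low-weight word for each successive option.
    for k in range(len(weighted) - 1, 0, -1):
        keep = sorted(ranked[:k])  # restore the original word order
        options.append(" ".join(weighted[i][0] for i in keep))
        if len(options) == n_options:
            break
    return options


prompt = [
    ("a", 0.05), ("very", 0.10), ("beautiful", 0.30), ("cinematic", 0.60),
    ("portrait", 0.90), ("of", 0.05), ("an", 0.05), ("astronaut", 1.00),
]
for option in shorten_candidates(prompt):
    print(option)
```

With these made-up weights the options run from “a very beautiful cinematic portrait of astronaut” down to “cinematic portrait astronaut”. The real command computes its own weights; the sketch only shows why low-weight filler words are the first to go.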

When it comes to process control, Midjourney has been catching up with the competition, but it still lags behind in user-friendliness. Users who value convenience over flexibility may prefer Adobe Firefly, a rival platform known for its ease of use. Stable Diffusion, on the other hand, offers unmatched flexibility thanks to its open-source nature and extensions. StabilityAI has had occasional delays and made ambiguous statements such as “50% trained” and “will be available in mid-July” (a date that may not be accurate), but once the weights are released, things should fall into place.

With these advancements, Midjourney continues to provide exciting features for easy and efficient image generation. Users can now enjoy more control over their prompts, resulting in better outputs. As technology continues to progress, platforms like Midjourney aim to enhance user experiences and make creative endeavors more accessible to all.

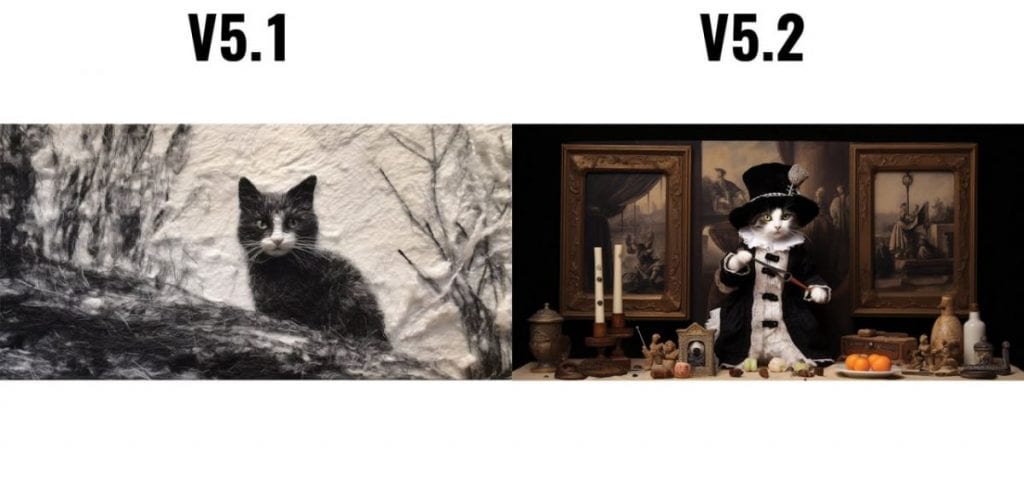

The latest version of Midjourney also emphasizes realism, which is particularly noticeable in art generation. To highlight these improvements, the same prompt and seed were run in Midjourney versions 5.1 and 5.2 for comparison.

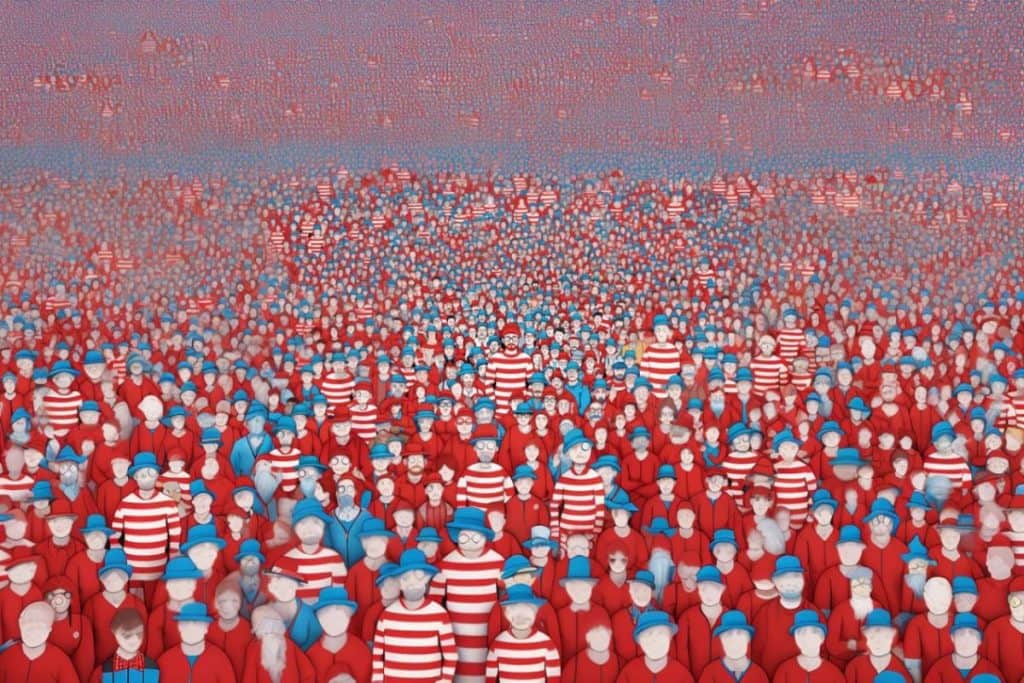

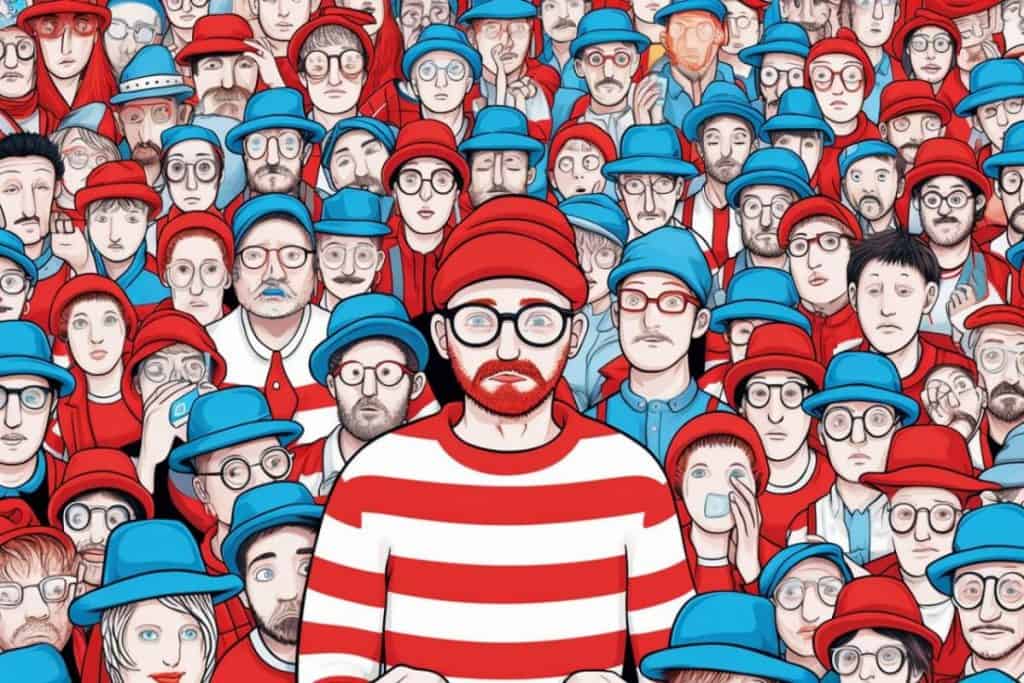
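Reusing the same prompt and seed is what makes this kind of version comparison fair. The sketch below assumes, as is typical for diffusion samplers, that generation starts from pseudo-random noise drawn from a seeded generator; Python’s `random` module stands in for that generator, and `initial_noise` is a hypothetical helper, not part of any Midjourney API.

```python
# Minimal sketch of why a fixed seed makes version comparisons fair.
# Assumption: a diffusion sampler starts from pseudo-random noise drawn from
# a seeded generator; Python's `random` stands in for that generator here.
import random


def initial_noise(seed: int, n: int = 8) -> list[float]:
    """Draw the starting Gaussian noise for a hypothetical n-value latent."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


same_a = initial_noise(seed=1234)
same_b = initial_noise(seed=1234)
other = initial_noise(seed=5678)

print(same_a == same_b)  # True: identical starting point for both versions
print(same_a == other)   # False: a different seed changes the starting point
```

Because both model versions begin from identical noise, visible differences between the 5.1 and 5.2 images can be attributed to the models rather than to chance.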

Freshly generated examples by Midjourney 5.2:

New Features in Stable Diffusion SDXL 0.9

StabilityAI has made improvements with the release of their latest model, Stable Diffusion SDXL 0.9. They promise enhanced perception of prompts and improved image detail, allowing users to create more captivating visuals. Even better, you can already try out the new model for free on ClipDrop.

The Stable Diffusion SDXL 0.9 model has attracted attention since StabilityAI announced it. The release has been officially confirmed, but a download link has not yet been provided. The base version of SDXL 0.9 has 3.5 billion parameters, and there are also plans for an ensemble of two models totaling 6.6 billion parameters.

To improve image generation, StabilityAI concatenates two CLIP models: the original CLIP from OpenAI and OpenCLIP ViT-G/14. This combination allows the model to generate more accurate details. Side-by-side comparisons of the SDXL beta and the new SDXL 0.9 clearly show a substantial improvement in quality.
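Concatenating two text encoders typically means joining their per-token outputs along the feature axis. The sketch below shows that operation with stand-in encoders; the sizes (77 tokens, 768 features for OpenAI’s CLIP text encoder, 1280 for OpenCLIP ViT-G/14) are assumptions taken from the publicly released SDXL configuration rather than from this article, and `fake_encoder` is a hypothetical placeholder.

```python
# Sketch of feature-wise concatenation of two text encoders' outputs.
# Assumed sizes (from the public SDXL configuration, not from this article):
# 77 tokens per prompt, 768 features from OpenAI's CLIP text encoder,
# 1280 features from the OpenCLIP ViT-G/14 text encoder.
SEQ_LEN = 77
DIM_CLIP = 768
DIM_OPENCLIP = 1280


def fake_encoder(dim: int, fill: float) -> list[list[float]]:
    """Hypothetical stand-in for a text encoder: one `dim`-sized vector per token."""
    return [[fill] * dim for _ in range(SEQ_LEN)]


def concat_features(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Join two per-token embeddings along the feature axis."""
    if len(a) != len(b):
        raise ValueError("encoders must produce the same number of tokens")
    return [ta + tb for ta, tb in zip(a, b)]


emb = concat_features(fake_encoder(DIM_CLIP, 0.1), fake_encoder(DIM_OPENCLIP, 0.2))
print(len(emb), len(emb[0]))  # 77 2048
```

Each token ends up with a 2048-value vector that carries information from both encoders, which is one plausible reading of why the combination helps render finer details.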

Note that running the SDXL 0.9 model for inference requires a graphics card with at least 16 GB of VRAM for smooth performance. Users are now waiting for a detailed blog post and the release of the code, which are expected to bring further improvements and opportunities.
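A quick back-of-the-envelope calculation shows where memory goes. This sketch uses the parameter counts stated above and assumes 2 bytes per parameter in float16 and 4 bytes in float32; it covers the weights only, since activations and framework overhead come on top, so treat the figures as rough estimates rather than StabilityAI’s stated requirements.

```python
# Back-of-the-envelope memory for model weights alone.
# Assumptions: parameter counts from the article (3.5B base, 6.6B ensemble);
# 2 bytes per parameter in float16, 4 bytes in float32. Activations and
# framework overhead are NOT included.
BYTES_PER_PARAM = {"float16": 2, "float32": 4}


def weight_gb(params: float, dtype: str) -> float:
    """Weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in (("base", 3.5e9), ("ensemble", 6.6e9)):
    for dtype in BYTES_PER_PARAM:
        print(f"{name:8s} {dtype}: {weight_gb(params, dtype):.1f} GB")
```

Under these assumptions the base model’s float16 weights alone take about 7 GB and its float32 weights about 14 GB, which is consistent with a 16 GB card leaving some headroom for activations.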

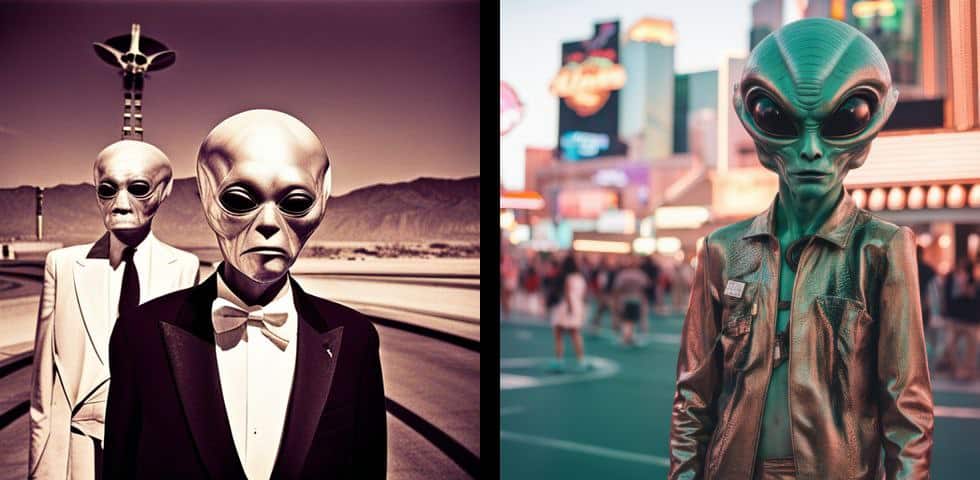

Freshly generated examples by SDXL 0.9:


Disclaimer

In line with the Trust Project guidelines, please note that the information provided on this page is not intended to be and should not be interpreted as legal, tax, investment, financial, or any other form of advice. It is important to only invest what you can afford to lose and to seek independent financial advice if you have any doubts. For further information, we suggest referring to the terms and conditions as well as the help and support pages provided by the issuer or advertiser. MetaversePost is committed to accurate, unbiased reporting, but market conditions are subject to change without notice.

About The Author

Damir is the team leader, product manager, and editor at Metaverse Post, covering topics such as AI/ML, AGI, LLMs, Metaverse, and Web3-related fields. His articles attract a massive audience of over a million users every month. He has 10 years of experience in SEO and digital marketing. Damir has been mentioned in Mashable, Wired, Cointelegraph, The New Yorker, Inside.com, Entrepreneur, BeInCrypto, and other publications. He travels between the UAE, Turkey, Russia, and the CIS as a digital nomad. Damir earned a bachelor's degree in physics, which he believes has given him the critical thinking skills needed to be successful in the ever-changing landscape of the internet.
